In the fast-paced world of software engineering and development, time is a precious commodity. With increasing pressure to deliver high-quality software faster, prioritizing test cases becomes a critical part of the software testing process.

In this piece, you will learn about the principles of test case prioritization, factors to consider when prioritizing test cases, techniques, and more.

What is test case prioritization?

Test case prioritization (TCP) means ordering the test cases your team runs based on their significance, the functionality they cover, and their potential impact on the software.

Prioritizing test cases ensures that QA executes the right tests at the right times. This could mean testing critical features first, using certain testing methodologies (like black-box testing) over others, running shorter tests before longer tests, or other tactics that allow you to surface risks faster and maximize your QA team’s time.

Test case prioritization applies to tests you run manually and with automation, although the way you prioritize your tests may differ slightly between them. For example, you may prioritize certain automated tests by scheduling them to run before others in your automated CI/CD build pipelines, while prioritizing manual tests (e.g., acceptance, exploratory, or other functional tests) may simply involve selecting those with the highest priority to run first.

Why is it important to prioritize test cases?

Prioritizing test cases and executing them in the most efficient order is essential to detecting bugs in your software as early as possible in the development life cycle.

Test case prioritization allows you to be more strategic with your testing and make the most of the limited time your team has to test, especially as the complexity of your application (and therefore the number of test cases) increases over time.

Although the prioritization of test cases is important regardless of your team’s development methodology, it is particularly important if your team uses an agile development process, where you have to start debugging as quickly as possible to keep up with the pace of releases.

Factors to consider for test case prioritization

As the many empirical studies and scholarly articles on test case prioritization published by the IEEE show, TCP can be an in-depth process involving sophisticated analysis, algorithms, and metrics like fault detection rate.

In practice, prioritizing test cases usually requires an understanding of your software, its critical functionalities, potential risks, and business priorities. By considering the following factors, QA engineers can improve their test case prioritization approach.

Identify critical functionalities in your software under test

As a QA engineer, it’s essential to understand the critical functionalities of the software you’re working on. These features are the most important to your users and the business. For example, in an e-commerce application, the checkout process is a critical functionality because it directly impacts revenue generation.

Assess risk

Evaluating potential risks and their level of impact is crucial in test case prioritization. Assessing risk usually involves analyzing the likelihood of an identified risk occurring and the potential consequences if it does.

One way to measure the risk associated with each test case is to consider technical factors such as fault proneness, fault severity, and code changes.

Fault proneness is more than a count of faults. When prioritizing test cases based on fault proneness, you assign priority based on how error-prone a requirement has been in previous versions of the software.

According to the Association for Computing Machinery, “The fault-proneness of an object-oriented class indicates the extent to which the class is prone to faults.” Fault severity refers to “how much of an impact it has on the software program under test. A higher severity rating indicates that the bug/defect has a greater impact on system functionality.”

You can also assess risk more generally. Asking questions like “How complex is the feature? How frequently is it used? How critical is it?” can help guide your assessment. Consider how much a defect could affect customers. How visible is it? How difficult is it to fix? Will it affect revenue, customer retention, or loyalty? Based on the answers, assign each test case a qualitative or numerical value for risk and impact.

Align with business priorities

Keep customer requirements and preferences in mind when prioritizing test cases. For instance, if you launch a new feature to address a specific customer need, prioritize test cases related to that feature.

Test case prioritization should align with the overall business goals of the software development project.

Techniques for test case prioritization

Test case prioritization helps QA engineers identify higher-priority test cases and focus on those with the most significant potential for fault detection and risk coverage. Using the following techniques to prioritize tests can ensure that testing efforts focus on the most high-impact areas.

Risk-based prioritization

In the context of test case prioritization, risk refers to the probability of a bug occurring, weighed against the impact it would have. The risk-based prioritization technique analyzes risk to identify areas that could cause unwanted problems if they fail, and it prioritizes test cases with high risk over those with low risk.

The following steps can help to guide your risk analysis:

- Ask yourself the right questions to help determine risk:

  - How complex is the feature?

  - How frequently is it used?

  - How critical is it for functionality?

  - How difficult is it to fix the defect?

  - How much will the defect affect users?

  - How much will the defect affect revenue?

- Identify the potential problems and the probability of each one occurring.

- Calculate the severity of impact for each problem.
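The steps above can be condensed into a simple numeric score. The following is a minimal Python sketch, not a prescribed formula: it assumes each test case has been rated with an estimated probability of failure (0 to 1) and a severity of impact (1 to 5), and it treats risk as probability multiplied by severity. The test names and ratings are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RatedTest:
    name: str
    probability: float  # assumed scale: estimated likelihood (0.0-1.0) that the tested area fails
    severity: int       # assumed scale: impact if it fails (1 = minor, 5 = critical)

    @property
    def risk(self) -> float:
        # Risk exposure: likelihood multiplied by impact
        return self.probability * self.severity


def prioritize_by_risk(tests: list[RatedTest]) -> list[RatedTest]:
    """Return test cases ordered from highest to lowest risk exposure."""
    return sorted(tests, key=lambda t: t.risk, reverse=True)


# Hypothetical ratings for an e-commerce application
tests = [
    RatedTest("search_filters", probability=0.3, severity=2),
    RatedTest("checkout_payment", probability=0.4, severity=5),
    RatedTest("profile_avatar_upload", probability=0.5, severity=1),
]

for t in prioritize_by_risk(tests):
    print(f"{t.name}: risk={t.risk:.1f}")
# checkout_payment: risk=2.0
# search_filters: risk=0.6
# profile_avatar_upload: risk=0.5
```

The ratings are usually rough estimates, so a score like this works best as a starting point for the team's discussion rather than a final ranking.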

History-based prioritization

History-based prioritization considers the fault proneness and fault severities of modules in the software to prioritize test cases. This approach uses historical data, such as previous test execution results and fault detection rates, to prioritize test cases. Using this approach, you would give test cases that have detected faults in the past or have a higher rate of fault detection a higher priority.
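As a rough illustration, the sketch below ranks tests by their historical failure rate, treating each failed run as a detected fault. It assumes you can export a pass/fail history per test case from your CI system or test management tool; the test names and histories are hypothetical, and fault severity is ignored for brevity.

```python
def fault_detection_rate(history: list[bool]) -> float:
    """Fraction of past runs in which the test failed (True = failed, i.e., detected a fault)."""
    if not history:
        return 0.0  # no history yet: treat as no evidence of fault detection
    return sum(history) / len(history)


def prioritize_by_history(histories: dict[str, list[bool]]) -> list[str]:
    """Order tests so that those with higher past failure rates run first.

    sorted() is stable, so tests with equal rates keep their original order.
    """
    return sorted(
        histories,
        key=lambda name: fault_detection_rate(histories[name]),
        reverse=True,
    )


# Hypothetical results from the last four runs of each test (True = failed)
histories = {
    "test_login": [False, False, False, False],
    "test_checkout": [True, False, True, False],
    "test_inventory_sync": [False, True, False, False],
}

print(prioritize_by_history(histories))
# ['test_checkout', 'test_inventory_sync', 'test_login']
```

With this scoring, brand-new tests with no history sink to the bottom; depending on your process, you may prefer to run them early instead, since they have not yet proven themselves.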

Requirements-based prioritization

Requirements-based prioritization ranks requirements by how critical they are or how much they affect your software’s functionality, then prioritizes the test cases that cover them accordingly. With this technique, test cases are assigned priority levels, and higher-priority test cases (the most critical ones) are executed before lower-priority test cases.
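A minimal sketch of this idea: each requirement gets a priority level, each test case is traced to a requirement, and tests inherit the priority of the requirement they verify. The requirement IDs, the mapping, and the convention that 1 is the highest priority are all assumptions for illustration.

```python
# Hypothetical requirement priorities (1 = highest, 3 = lowest)
requirement_priority = {
    "REQ-CHECKOUT": 1,
    "REQ-SEARCH": 2,
    "REQ-WISHLIST": 3,
}

# Hypothetical traceability: the requirement each test case verifies
test_to_requirement = {
    "test_wishlist_add": "REQ-WISHLIST",
    "test_checkout_card": "REQ-CHECKOUT",
    "test_search_by_name": "REQ-SEARCH",
    "test_checkout_coupon": "REQ-CHECKOUT",
}


def prioritize_by_requirement(tests: dict[str, str], priorities: dict[str, int]) -> list[str]:
    """Order tests by their requirement's priority level.

    Requirements without an assigned priority are treated as lowest priority.
    """
    lowest = max(priorities.values(), default=0) + 1
    return sorted(tests, key=lambda test: priorities.get(tests[test], lowest))


print(prioritize_by_requirement(test_to_requirement, requirement_priority))
# ['test_checkout_card', 'test_checkout_coupon', 'test_search_by_name', 'test_wishlist_add']
```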

Coverage-based prioritization

Coverage-based prioritization prioritizes test cases based on code coverage, so that the tests exercising the most code run first. This prioritization technique is most often used in conjunction with unit tests or automated tests. Some sub-techniques for coverage-based prioritization include:

- Total Statement Coverage Prioritization: Test cases are prioritized based on the total number of statements they cover. For example, if T1 covers 7 statements, T2 covers 4, and T3 covers 15 statements, then according to this prioritization, the order will be T3, T1, T2.

- Total Branch Coverage Prioritization: Test cases are prioritized based on the total number of branches they cover. Branch coverage measures whether each branch of execution in an application is under test; within the context of test case prioritization, a branch is each possible outcome of a condition (for example, the true and false outcomes of an if statement).
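Both sub-techniques follow the same pattern: count the statements or branches each test covers and run the tests with the largest totals first. The sketch below reproduces the T1/T2/T3 statement example; the specific statement numbers and the branch data are hypothetical, and only the counts matter. Note that this "total" strategy ignores overlap between tests, so two tests covering the same code can both rank high.

```python
def total_coverage_order(coverage: dict[str, set]) -> list[str]:
    """Total coverage prioritization: tests covering more items (statements or branches) run first."""
    return sorted(coverage, key=lambda test: len(coverage[test]), reverse=True)


# Statement coverage from the example: T1 covers 7, T2 covers 4, T3 covers 15 statements
statement_coverage = {
    "T1": set(range(1, 8)),    # statements 1-7
    "T2": set(range(20, 24)),  # statements 20-23
    "T3": set(range(1, 16)),   # statements 1-15
}
print(total_coverage_order(statement_coverage))  # ['T3', 'T1', 'T2']

# Branch coverage: each branch is a (condition line, outcome) pair
branch_coverage = {
    "T1": {(10, True), (10, False)},
    "T2": {(10, True), (25, True), (25, False)},
    "T3": {(25, False)},
}
print(total_coverage_order(branch_coverage))  # ['T2', 'T1', 'T3']
```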

Version-based prioritization

Version-based prioritization focuses on test cases related to new or modified code in the latest version of the software. It prioritizes regression test cases that target areas affected by recent code changes to ensure the stability of the software.
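One way to apply this is to compare the files changed in the latest version against a map of which files each test exercises, then run the tests that touch the most changed files first. The sketch below assumes you already have that test-to-file map (for example, from a coverage tool) and the set of changed files (for example, from a version-control diff); both are hypothetical inputs here.

```python
def prioritize_by_changes(test_files: dict[str, set[str]], changed: set[str]) -> list[str]:
    """Run tests that exercise changed files first; more overlap means higher priority.

    Tests that touch no changed files keep their original relative order at the end.
    """
    return sorted(test_files, key=lambda test: len(test_files[test] & changed), reverse=True)


# Hypothetical map of the source files each regression test exercises
test_files = {
    "test_search": {"search.py", "catalog.py"},
    "test_checkout": {"cart.py", "payment.py"},
    "test_profile": {"profile.py"},
}

# Hypothetical files modified in the latest version
changed = {"payment.py", "cart.py", "catalog.py"}

print(prioritize_by_changes(test_files, changed))
# ['test_checkout', 'test_search', 'test_profile']
```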

Cost-based prioritization

Cost-based prioritization considers the cost of executing each test case, such as execution time, resources, and test environment setup. With this approach, you prioritize test cases to provide the most significant benefits at the lowest cost.
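A minimal sketch of this trade-off, assuming each test has a benefit score (for example, a risk or fault-detection score) and an execution cost in minutes that includes environment setup. The scores, costs, and time budget are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class CostedTest:
    name: str
    value: float    # hypothetical benefit score, e.g., a risk or fault-detection score
    minutes: float  # execution cost in minutes (run time plus setup); assumed > 0


def prioritize_by_cost(tests: list[CostedTest], budget_minutes: float) -> list[str]:
    """Greedy sketch: rank tests by value per minute, then keep those that fit the time budget."""
    ranked = sorted(tests, key=lambda t: t.value / t.minutes, reverse=True)
    selected: list[str] = []
    used = 0.0
    for t in ranked:
        if used + t.minutes <= budget_minutes:
            selected.append(t.name)
            used += t.minutes
    return selected


tests = [
    CostedTest("checkout_e2e", value=9, minutes=30),
    CostedTest("cart_api", value=6, minutes=3),
    CostedTest("search_ui", value=4, minutes=20),
    CostedTest("tax_calc_unit", value=5, minutes=1),
]

print(prioritize_by_cost(tests, budget_minutes=40))
# ['tax_calc_unit', 'cart_api', 'checkout_e2e']
```

Ranking by value per minute is a greedy heuristic: it is fast and easy to explain, but it is not guaranteed to find the highest-value set of tests for a fixed budget (that is a knapsack problem).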

Best practices for effective test case prioritization and maintenance

When done right, TCP maximizes the value the testing process delivers. Here are some best practices for effective test case prioritization:

Collaborate with developers and business stakeholders

Involve developers, QA engineers, and business stakeholders (if it makes sense to your organization) in the prioritization process to ensure a comprehensive understanding of the software’s requirements and risks. Increasing collaboration helps identify critical functionalities and potential issues that QA engineers must address during testing.

Establish clear communication

Establish communication channels among team members to share insights, concerns, and suggestions for test case prioritization. A shared understanding of the software’s requirements, risks, and priorities helps streamline the testing process and ensures everyone is on the same page.

Adjust priorities based on changing requirements

As software development progresses, be prepared to reassess and adjust your test case prioritization based on new information, such as code changes, bug reports, or changing requirements. Regularly adapt your test case prioritization to accommodate changes to ensure your test suite is up-to-date and your testing efforts align with the project’s goals.

Continuously evaluate the effectiveness of prioritization techniques

Regularly assess the effectiveness of your test case prioritization techniques, such as coverage-based, history-based, or risk-based approaches. Use QA metrics like fault detection rate, execution time, and the Average Percentage of Faults Detected (APFD) to evaluate the success of your prioritization efforts and make adjustments as needed.
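APFD is commonly defined as APFD = 1 - (TF1 + TF2 + ... + TFm) / (n × m) + 1 / (2n), where n is the number of test cases, m is the number of faults, and TFi is the position of the first test in the execution order that reveals fault i. Values closer to 1 mean faults are found earlier. The Python sketch below compares two orderings of the same hypothetical suite; it assumes you know which faults each test reveals, and it counts only faults revealed by at least one test in the order.

```python
def apfd(order: list[str], reveals: dict[str, set[str]]) -> float:
    """Average Percentage of Faults Detected (APFD) for one execution order.

    order:   test case names in the order they run
    reveals: the set of faults each test case detects (hypothetical input)
    """
    n = len(order)
    # m counts only faults detected by some test in the order
    faults = set().union(*(reveals.get(test, set()) for test in order))
    m = len(faults)
    if n == 0 or m == 0:
        raise ValueError("APFD needs at least one test case and one detected fault")

    # TF_i: 1-based position of the first test that reveals fault i
    first_position: dict[str, int] = {}
    for position, test in enumerate(order, start=1):
        for fault in reveals.get(test, set()):
            first_position.setdefault(fault, position)

    tf_sum = sum(first_position[fault] for fault in faults)
    return 1 - tf_sum / (n * m) + 1 / (2 * n)


# Hypothetical fault data for a four-test suite
reveals = {
    "T1": {"F1"},
    "T2": {"F1", "F2", "F3"},
    "T3": {"F4"},
    "T4": set(),
}

print(apfd(["T1", "T2", "T3", "T4"], reveals))  # 0.625
print(apfd(["T2", "T3", "T1", "T4"], reveals))  # 0.8125
```

Running T2 first surfaces three of the four faults immediately, which is why the second ordering scores higher.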

Prioritize test cases for regression testing

Regression testing ensures that code changes don’t introduce new faults or negatively affect existing functionality. As the test suite expands, it is important to prioritize regression test cases to maximize fault detection and minimize test execution time.

Focus on running regression tests around critical areas, risky areas, or key business drivers. It may also be important to run regression tests around areas where you have detected faults in the past, such as parts of your application that rely on system integrations or that are particularly brittle.

Image: Organize your TestRail test case repository based on priority.

How to measure the effectiveness of a prioritized test suite

Measuring the effectiveness of your test suite is essential for determining the success of your prioritization, setting benchmarks, and making data-informed decisions. Here are three ways QA engineers can measure the impact of their prioritization efforts and take data-driven actions to improve the effectiveness of the test suite.

1. Define Metrics for Measuring Effectiveness

To measure the effectiveness of a prioritized test suite, it is essential to define relevant metrics. Metrics to measure the effectiveness of a test suite include:

Test Coverage

Test coverage refers to how much of your application’s total requirements are covered by tests. If you have low test coverage, that means you have untested requirements—raising the probability of shipping code with undetected risks.

Test Coverage = (Total number of requirements mapped to test cases / Total number of requirements) x 100.
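The formula translates directly into code. This minimal sketch assumes requirement IDs and a test-to-requirement mapping exported from your test management tool (the IDs here are hypothetical), and it also lists the untested requirements, which are the ones to look at first.

```python
def requirements_coverage(requirements: set[str], test_refs: dict[str, set[str]]) -> float:
    """Test Coverage = (requirements mapped to test cases / total requirements) x 100."""
    if not requirements:
        raise ValueError("no requirements defined")
    mapped = requirements & set().union(*test_refs.values())
    return len(mapped) * 100 / len(requirements)


# Hypothetical requirements and the requirements each test case references
requirements = {f"REQ-{i}" for i in range(1, 9)}  # REQ-1 ... REQ-8
test_refs = {
    "test_login": {"REQ-1", "REQ-2"},
    "test_search": {"REQ-3"},
    "test_checkout": {"REQ-4", "REQ-5", "REQ-6"},
}

print(requirements_coverage(requirements, test_refs))  # 75.0

covered = set().union(*test_refs.values())
print(sorted(requirements - covered))  # ['REQ-7', 'REQ-8']
```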

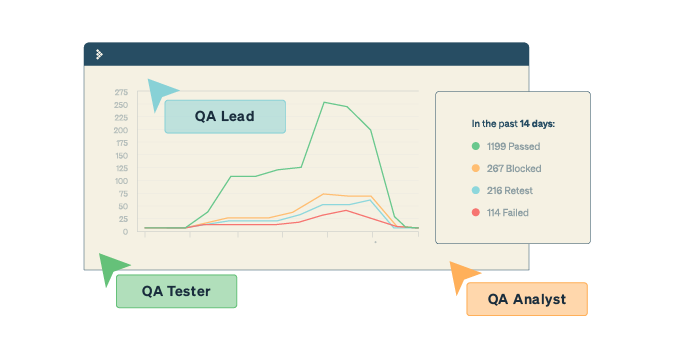

Image: View real-time metrics and statistics and generate advanced traceability and coverage reports like Coverage for References with TestRail.

Defect Detection Rate

The Defect Detection Rate metric measures the number of defects found during testing. A high defect detection rate indicates that the test suite effectively identifies defects.
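A raw count is hard to compare between releases of different sizes, so this rate is often expressed as a percentage of all known defects, including those that escaped to production (a formulation sometimes called Defect Detection Percentage). The sketch below assumes that formulation; the defect counts are hypothetical.

```python
def defect_detection_percentage(found_in_testing: int, found_after_release: int) -> float:
    """Share of all known defects that testing caught before release, as a percentage."""
    total = found_in_testing + found_after_release
    if total == 0:
        raise ValueError("no defects recorded")
    return found_in_testing * 100 / total


# Hypothetical release: 45 defects caught in testing, 5 reported by users afterward
print(defect_detection_percentage(45, 5))  # 90.0
```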

Defects Per Requirement (Requirement Defect Density)

The Defects Per Requirement metric measures the number of defects found per requirement. This metric identifies areas of the application that require more testing and can reveal whether specific requirements are riskier than others. This helps product teams decide whether they should release those features.
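Assuming each defect in your tracker is linked to the requirement it affects, the per-requirement counts take only a few lines to compute. The requirement IDs and defect links below are hypothetical; requirements with no defects are included so they are not silently dropped from the ranking.

```python
from collections import Counter


def defects_per_requirement(defect_requirements: list[str], requirements: list[str]) -> list[tuple[str, int]]:
    """Count defects linked to each requirement, riskiest first (zero-defect requirements included)."""
    counts = Counter(defect_requirements)
    return sorted(((req, counts[req]) for req in requirements), key=lambda pair: pair[1], reverse=True)


# Hypothetical defect log: the requirement each defect was traced to
defect_requirements = ["REQ-CHECKOUT", "REQ-LOGIN", "REQ-CHECKOUT", "REQ-SEARCH", "REQ-CHECKOUT", "REQ-LOGIN"]
requirements = ["REQ-LOGIN", "REQ-SEARCH", "REQ-CHECKOUT", "REQ-WISHLIST"]

ranking = defects_per_requirement(defect_requirements, requirements)
print(ranking)
# [('REQ-CHECKOUT', 3), ('REQ-LOGIN', 2), ('REQ-SEARCH', 1), ('REQ-WISHLIST', 0)]

# Overall density: average defects per requirement
print(len(defect_requirements) / len(requirements))  # 1.5
```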

Test Cost/Time to Test

These metrics measure the time and resources required to execute the test suite. More specifically, time to test reveals how quickly a team or QA engineer can create and execute tests without affecting software quality. Better test case prioritization should reduce testing costs, which matters because many QA teams work within fixed budgets and must track planned spending closely to justify those budgets.

Change Failure Rate

According to the DORA report, the Change Failure Rate measures the percentage of changes that lead to failures after they reach production or are released to end users.
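As a rough sketch, the rate is the number of changes that caused a failure in production divided by the total number of changes deployed, expressed as a percentage. How you classify a change as failed (for example, one that required a rollback or hotfix) is a decision your team needs to settle; the deployment data here is hypothetical.

```python
def change_failure_rate(deployments: list[bool]) -> float:
    """Percentage of production changes that led to a failure (True = change caused a failure)."""
    if not deployments:
        raise ValueError("no deployments recorded")
    return sum(deployments) * 100 / len(deployments)


# Hypothetical month: 20 deployments, 3 of which needed a rollback or hotfix
deployments = [True] * 3 + [False] * 17
print(change_failure_rate(deployments))  # 15.0
```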

2. Track and Analyze the Metrics

Once you have identified and defined relevant metrics, implement a process for collecting data on them. Then analyze the collected data to evaluate the effectiveness of the prioritized test suite.

Here are some important considerations to keep in mind when analyzing QA metrics for test case prioritization:

- QA metrics are indicators, not absolutes.

- Don’t rely on a single QA metric to evaluate the quality of the product.

- Your QA team members should understand what metrics are being tracked and how they are calculated and measured.

- Focus your analysis on identifying areas of the application that require more testing and areas where you can reduce your testing efforts.

You can leverage a test management tool to help record estimates and elapsed test times, compare results across multiple test runs, configurations, and milestones, and receive traceability and coverage reports for requirements, tests, and defects.

To learn more about how TestRail can help you visualize test data in real time and how to use report templates to check test coverage and traceability, check out our free TestRail Academy course on Reports & Analytics.

3. Make Adjustments Based on Analysis

It is essential to identify areas of improvement based on your data collection and analysis and make necessary adjustments to your test suite prioritization.

For example, if test coverage is low in a critical area of the application, the team should prioritize testing efforts in that area. On the other hand, if test cost/time is high, the team should identify areas where they can reduce or speed up their testing efforts without significantly increasing risk.

Adjustments that you can make based on your analysis include the following:

- If test coverage is low, consider adding more test cases to cover the missing areas.

- If the defect detection rate is low, review the test cases to ensure they find defects effectively. Generally, a higher defect detection rate is desirable as it indicates that QA engineers and testers identify and address more issues before they release the software to end users.

- If the test cost/time is high, consider optimizing the testing process or automating more tests.

- If the change failure rate is high, review the prioritization strategy to ensure you are testing high-risk areas first.

Measuring the effectiveness of a prioritized test suite is essential to ensuring that testing efforts are focused on critical areas of the application. By defining relevant metrics, tracking and analyzing testing data, and making adjustments based on those results, testing teams can improve the effectiveness of the test suite.

Conclusion

When working in an agile environment, you must test more strategically and prioritize which tests you run to keep up with the pace of releases and maximize your risk coverage. Test case prioritization helps ensure that your team executes the most critical test cases first, maximizing fault detection and risk coverage.

By employing test case prioritization techniques and algorithms, QA engineers can optimize their testing process and improve the overall quality of their software development projects.

Image: TestRail’s detailed reports compare results across multiple test runs, configurations, and milestones, help you easily record estimates and elapsed test times for accurate time tracking, and give you traceability and coverage reports to help you meet compliance requirements.

In addition to these techniques, various tools can help you automate your test case prioritization process. Check out TestRail, test plan software designed to make prioritizing and organizing test cases as easy as possible, help you draft test cases faster, and structure them all in one centralized place. Try TestRail’s 14-day free trial today!