A test case is a documented set of actions your team should execute to verify whether a software application’s specific feature, functionality, or requirement is working as expected.

Test cases define what you will test before you actually start to test.

How to write effective test cases

Writing good test cases is critical for a thorough and optimized software testing process. Testing teams use test cases to:

- Plan what needs to be tested and how to test it before starting testing

- Make testing more efficient by providing important details like preconditions that need to be satisfied before beginning testing and sample data to use during testing

- Measure and track test coverage

- Compare expected results with actual test outcomes to determine if the software is working as intended

- Record how your team has tested your product in the past, so you can catch regressions and defects and confirm that new software updates have not introduced unexpected issues.

Here are four common steps to follow when writing test cases:

- Identify the feature to be tested

What features of your software require testing? For example, if you want to test your website’s search functionality, you need to mark its search feature for testing.

- Identify the test scenarios

What scenarios can be tested to verify all aspects of the feature? Examples of potential test scenarios for testing your website’s login feature include:

- Testing with valid or invalid credentials

- Testing with a locked account

- Testing with expired credentials

It’s important to identify expected results for both positive and negative test scenarios. For example, for a login test scenario with valid credentials, the expected result would be a successful login and redirection to the user’s account page. The expected result for a test scenario with invalid credentials would be an error message and prevention of access to the account page.

- Identify test data

What data will you use to execute and evaluate the test for each test scenario? For example, in a login test scenario for testing with invalid credentials, the test data might include an incorrect username and password combination.
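To see how the feature, scenarios, test data, and expected results fit together, here is a minimal data-driven sketch in Python for the login example. The `login` function, the accounts in `USERS`, and the error messages are hypothetical stand-ins for your real application; in practice the check would drive your UI or API instead.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical accounts standing in for the application's user store.
USERS = {
    "alice": {"password": "correct-horse", "locked": False, "expired": False},
    "bob": {"password": "hunter2", "locked": True, "expired": False},
    "carol": {"password": "s3cret", "locked": False, "expired": True},
}


def login(username: str, password: str) -> str:
    """Hypothetical system under test: return the landing page or an error message."""
    user = USERS.get(username)
    if user is None or user["password"] != password:
        return "error: invalid username or password"
    if user["locked"]:
        return "error: account locked"
    if user["expired"]:
        return "error: credentials expired"
    return "account page"


@dataclass
class Scenario:
    name: str      # the test scenario
    username: str  # test data
    password: str  # test data
    expected: str  # expected result


SCENARIOS = [
    Scenario("valid credentials", "alice", "correct-horse", "account page"),
    Scenario("invalid credentials", "alice", "wrong-password", "error: invalid username or password"),
    Scenario("locked account", "bob", "hunter2", "error: account locked"),
    Scenario("expired credentials", "carol", "s3cret", "error: credentials expired"),
]


def run_scenarios(scenarios: List[Scenario]) -> List[str]:
    """Compare each actual outcome with its expected result; return failing scenario names."""
    return [s.name for s in scenarios if login(s.username, s.password) != s.expected]
```

Each `Scenario` row is one test scenario: its inputs are the test data, and `expected` is the expected result that `run_scenarios` compares against the actual outcome. An empty list means every scenario passed.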

- Identify the test approach

Once you have identified the test feature, scenarios, and data for your test case, you will better understand how to approach the test for the most effective outcomes.

Here are some important considerations for this step:

- If you are testing a new feature for the first time and need to confirm specific functionality, you may want to write more detailed test steps. For example, if you are testing a new checkout process for an e-commerce website, you might include detailed steps such as adding items to the cart, entering shipping and billing information, and confirming the order.

- If your test case design is exploratory or user-acceptance focused, you may simply write a test charter or mission that you would like to accomplish during testing. For example, if you are conducting user acceptance testing for a new feature on a mobile app, your mission might be to ensure that the feature’s login process is easy to use and meets the user’s needs.

What to include in a test case

In agile software development, test cases are more like outlines than a list of step-by-step instructions for a single test. Test cases can include details on required conditions, dependencies, procedures, tools, and the expected output.

Six common elements of a test case are:

- Title: The title of a test case points to the software feature being tested.

- Description (including the test scenario): The description summarizes what the test verifies.

- Test script (if automated): In automated testing, a test script provides a detailed description of data and actions required for each functionality test.

- Test ID: Every test case should have a unique ID that identifies it. For clarity, test case IDs should follow a standard naming convention.

- Details of the test environment: The test environment is the controlled setup or infrastructure where software or systems are tested.

- Notes: This section includes any relevant comments or details about the test case that do not fit in any other section of the test case template.
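If you keep test cases in code or export them from a tool, the six elements above map naturally onto a simple record. The sketch below assumes a hypothetical naming convention, `TC-<FEATURE>-<NNN>`, and hypothetical field names; it is not TestRail's data model. `find_problems` flags IDs that break the convention or are not unique.

```python
import re
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical naming convention for test IDs: TC-<FEATURE>-<3 digits>, e.g. TC-LOGIN-001.
ID_PATTERN = re.compile(r"TC-[A-Z]+-\d{3}")


def is_valid_id(test_id: str) -> bool:
    """True if the whole ID matches the (assumed) team naming convention."""
    return ID_PATTERN.fullmatch(test_id) is not None


@dataclass
class DocumentedTestCase:
    test_id: str                       # unique identifier
    title: str                         # points to the feature under test
    description: str                   # what the test verifies, including the scenario
    environment: str                   # where the test runs
    test_script: Optional[str] = None  # reference to the script, automated tests only
    notes: str = ""                    # anything that fits nowhere else


def find_problems(cases: List[DocumentedTestCase]) -> List[str]:
    """Report IDs that break the naming convention or are used more than once."""
    problems, seen = [], set()
    for case in cases:
        if not is_valid_id(case.test_id):
            problems.append(f"{case.test_id}: does not follow naming convention")
        if case.test_id in seen:
            problems.append(f"{case.test_id}: duplicate ID")
        seen.add(case.test_id)
    return problems


login_case = DocumentedTestCase(
    test_id="TC-LOGIN-001",
    title="Login: valid credentials open the account page",
    description="Verify that a user with valid credentials is logged in and redirected to the account page.",
    environment="Staging server, latest Chrome, seeded test user",
)
```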

Test case templates

Test case templates provide a structured and efficient way to document, manage, and execute test cases, ensuring that testing efforts are consistent, well-documented, and aligned with your business standards and requirements.

In TestRail, you can reuse your test case templates across different projects and test suites and customize them to align with specific testing methodologies and project requirements. These capabilities make it a robust and adaptable testing tool for maintaining consistency, efficiency, and organization in the testing process.

When writing test cases in TestRail, there are four default templates you can customize:

- Test Case (Text)

This flexible template allows users to describe the steps testers should take to test a given case more fluidly.

- Test Case (Steps)

This template allows you to add individual result statuses to each step of your test, as well as links to defects, requirements, or other external entities for each step. This distinction provides you with greater visibility, structure, and traceability.

- Exploratory Session

TestRail’s Exploratory Session template uses text fields where you can define your Mission and Goals, which will guide you through your exploratory testing session.

- Behavior Driven Development

This template allows you to design and execute your BDD scenarios directly within TestRail. Users can also define tests as scenarios. Scenarios in BDD typically follow the Given-When-Then (GWT) format.

Test case templates enhance consistency by providing a standardized format that makes it easier to create, execute, and analyze test cases, and they support thorough documentation by capturing important information about test scenarios and procedures.

To learn more about using TestRail to build and optimize your testing processes, from test case design to test planning and execution, check out our free TestRail Academy course, Fundamentals of Testing with TestRail.

Test case writing best practices

Developing effective test cases is essential for ensuring high-quality software. Here are some best practices for writing optimized test cases:

- Write test cases based on priority

Prioritize test cases based on their importance and potential impact on the software. You can use a priority system to help identify which test cases should be written and executed first.

To identify higher-priority test cases, you can use test case prioritization techniques such as:

- Risk-based prioritization

- History-based prioritization

- Coverage-based prioritization

- Version-based prioritization

- Cost-based prioritization

For example, if you are testing an e-commerce application and using risk-based prioritization to write test cases, a test case that verifies the correct calculation of sales tax may have a higher priority and risk level than a test case that verifies the color of a button.
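One common way to apply risk-based prioritization is to score each test case on how likely the tested behavior is to fail and how severe a failure would be, then run the cases with the highest product first. The 1-to-5 scales and the scores below are illustrative assumptions, not a standard.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PrioritizedCase:
    title: str
    likelihood: int  # 1 (unlikely to fail) .. 5 (very likely to fail)
    impact: int      # 1 (cosmetic) .. 5 (critical business impact)

    @property
    def risk_score(self) -> int:
        # Simple risk model: likelihood multiplied by impact.
        return self.likelihood * self.impact


def prioritize(cases: List[PrioritizedCase]) -> List[PrioritizedCase]:
    """Order cases from highest to lowest risk; ties keep their original order."""
    return sorted(cases, key=lambda c: c.risk_score, reverse=True)


cases = [
    PrioritizedCase("Checkout button color matches design", likelihood=2, impact=1),
    PrioritizedCase("Sales tax is calculated correctly", likelihood=3, impact=5),
    PrioritizedCase("Search returns matching products", likelihood=3, impact=3),
]

for case in prioritize(cases):
    print(f"{case.risk_score:>2}  {case.title}")
```

With these assumed scores, the sales tax case (15) is written and run before the search case (9) and the button color check (2), matching the example above.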

- Make your test cases clear and easy to understand

Your test cases should be clear and easy to understand so that anyone on the testing team knows exactly what the test is intended for.

For example, if you are testing login functionality, your test case should clearly state the steps to log in, the login credentials to use, and the expected result, such as the successful display of the user dashboard. Attachments, screenshots, or recordings can also help illustrate the steps.

Ensure that test case names are easy to understand and reference. The title should indicate which project the test case is part of and what it’s meant to do. An easy-to-follow naming convention is especially important when dealing with thousands of test cases.

When naming a test case related to reusable objects, consider including that information in the title. Information regarding preconditions, attachments, and test environment data should be documented in the test case description.

- Specify expected results

Expected results can help you ensure that you are executing your tests correctly and your software is functioning as anticipated. Specifying an expected result for your test case gives you a reference point to compare the actual results to.

For example, if you are testing a shopping cart feature, the expected result for this test case would specify that the selected item has been added to the cart successfully and that the cart displays the correct price.
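The shopping cart example can be expressed as an automated check in which each expected result becomes an explicit assertion. `Cart` below is a hypothetical, minimal stand-in for a real cart; `Decimal` is used so that prices compare exactly.

```python
from dataclasses import dataclass, field
from decimal import Decimal
from typing import Dict, Tuple


@dataclass
class Cart:
    """Hypothetical, minimal cart: product name -> (unit price, quantity)."""
    items: Dict[str, Tuple[Decimal, int]] = field(default_factory=dict)

    def add(self, name: str, unit_price: Decimal, quantity: int = 1) -> None:
        _, current_qty = self.items.get(name, (unit_price, 0))
        self.items[name] = (unit_price, current_qty + quantity)

    def total(self) -> Decimal:
        return sum((price * qty for price, qty in self.items.values()), Decimal("0"))


def check_add_item_to_cart() -> None:
    cart = Cart()
    cart.add("Wireless mouse", Decimal("24.99"))
    # Expected result 1: the selected item has been added to the cart.
    assert cart.items["Wireless mouse"] == (Decimal("24.99"), 1)
    # Expected result 2: the cart displays the correct price.
    assert cart.total() == Decimal("24.99")
```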

- Write test cases for happy and unhappy paths

To achieve maximum coverage of software requirements, you must write test cases that cover as many scenarios as possible.

Happy paths are the common paths users take when interacting with software. Unhappy paths are scenarios in which users behave unexpectedly, such as entering invalid data. Covering unhappy paths helps ensure that the software displays proper error messages that guide users instead of letting unexpected input break it.

For example, if you are testing a search function on an e-commerce site, a happy path test case could be searching for a specific product and successfully finding it. An unhappy path test case could be searching for a product that doesn’t exist and verifying that the appropriate error message is displayed.
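Here is a minimal sketch of the search example with one happy path and two unhappy paths. The `search` function, the `CATALOG` contents, and the messages are hypothetical; the point is that unhappy-path checks assert on the message the user sees, not only on an empty result.

```python
from typing import Dict, List, Union

# Hypothetical catalog standing in for the real site's product data.
CATALOG = ["Wireless mouse", "Mechanical keyboard", "USB-C hub"]


def search(query: str) -> Dict[str, Union[List[str], str]]:
    """Hypothetical search: return matching products plus a message shown to the user."""
    term = query.strip().lower()
    if not term:
        return {"results": [], "message": "Please enter a search term."}
    matches = [product for product in CATALOG if term in product.lower()]
    if not matches:
        return {"results": [], "message": f"No products found for '{query}'."}
    return {"results": matches, "message": ""}


def check_happy_path() -> None:
    # Happy path: the user searches for a product that exists and finds it.
    assert search("keyboard")["results"] == ["Mechanical keyboard"]


def check_unhappy_paths() -> None:
    # Unhappy path: no matching product, so a clear message must be shown.
    response = search("toaster")
    assert response["results"] == []
    assert response["message"] == "No products found for 'toaster'."
    # Unhappy path: a blank query should prompt the user instead of failing.
    assert search("   ")["message"] == "Please enter a search term."
```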

- Review your test cases

Regularly review and refine test cases in collaboration with the team. As the product evolves, test cases may need to be updated to reflect changes in requirements or functionality. This review is particularly crucial for products undergoing significant changes, such as new feature additions, enhancements, or modifications in requirements.

Peer reviewing can also be beneficial for identifying any gaps, inconsistencies, ambiguities, misleading steps, or errors in your test cases and ensuring that they meet the required standards.

For example, suppose an e-commerce website changes its payment gateway provider. You should then review your existing test cases to ensure they still cover all the necessary test scenarios and requirements for the new provider.

The bottom line is that effectively writing and managing test cases is integral to software testing and requires careful planning, attention to detail, and clear communication. You can achieve the required level of organization and detail by implementing test case writing best practices and using a dedicated test case management tool like TestRail.

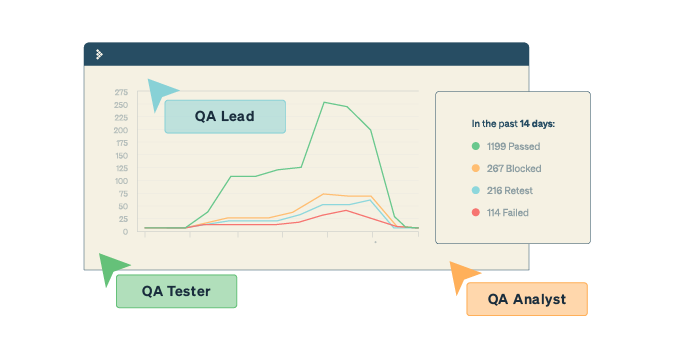

Image: TestRail’s intuitive interface makes it easy for you to write and organize your test cases by simply inputting preconditions, test instructions, expected results, priority, and effort estimates of your test case.

TestRail’s customizable and reusable test case templates also provide a pre-defined format for documenting test cases, making it easier to create, execute, and analyze tests.

This level of flexibility and visibility into your testing process makes TestRail an easy fit for any organization’s test plan. Try TestRail for free to see how it can help with your test planning.

Test case FAQs

Benefits of test cases

Test cases offer several benefits, aligning with the Agile methodology’s principles of flexibility, collaboration, and responsiveness to change. Here are the key benefits:

- Shared understanding: Test cases provide a clear and documented set of requirements and acceptance criteria for user stories or features. This ensures that the entire team, including developers, testers, and product owners, has a shared understanding of what needs to be tested.

- Efficiency: With predefined test cases, testing becomes more efficient. Software testers don’t have to decide what to test or how to test it each time. They can follow the established test cases, saving time and effort.

- Regression testing: Agile development involves frequent code changes. Test cases help ensure that new code changes do not introduce regressions by providing a structured set of tests to run after each change.

- Reusability: Test cases can be reused across sprints or projects, especially if they cover common scenarios. This reusability promotes consistency and saves time when testing similar functionality.

- Traceability: Test cases create traceability between user stories, requirements, and test execution. This traceability helps ensure that all requirements are tested and provides transparency in reporting.

- Documentation: Test cases document your testing efforts. They capture test scenarios, steps, and expected outcomes, making it easier to track progress and demonstrate compliance with requirements.

- Adaptability and faster feedback: Agile emphasizes early and continuous testing, and teams often need to respond to changing requirements and priorities. Test cases help identify issues and defects in the early stages of the software development lifecycle and can be updated or created on the fly for quicker feedback and corrective action.

- Continuous improvement: Agile encourages a culture of continuous improvement. Test cases and test results provide valuable data for retrospectives, helping teams identify areas for enhancement in their testing processes.

- Customer satisfaction: Effective testing leads to higher software quality and better user experiences. Well-documented test cases contribute to delivering a product that meets or exceeds customer expectations.

When written and used properly, test cases can foster collaboration, accelerate testing cycles, and enhance the overall quality of software products, ultimately enabling your team to deliver quality software in an iterative, customer-oriented manner.

Test cases for different software testing approaches

Different software testing approaches may require distinct types of test cases to address specific testing objectives. Here’s a breakdown of common types of test cases associated with different software testing approaches:

| Testing Approach | Types of Test Cases | Description |
| --- | --- | --- |
| Functional Testing | Unit Test Cases | Test individual functions or methods in isolation to ensure they work as expected. |
| | Integration Test Cases | Verify that different components or modules work together correctly when integrated. |
| | System Test Cases | Test the entire system or application to validate that it meets the specified functional requirements. |
| | User Acceptance Test (UAT) Cases | Involve end-users or stakeholders to ensure that the system meets their needs and expectations. |
| Non-Functional Testing | Performance Test Cases | Measure aspects like speed, responsiveness, scalability, and stability. |
| | Load Test Cases | Assess how the system performs under specific load conditions, such as concurrent users or data loads. |
| | Stress Test Cases | Push the system to its limits to identify failure points and performance bottlenecks. |
| | Security Test Cases | Evaluate the system’s security measures and vulnerabilities. |
| | Usability Test Cases | Assess the user-friendliness, intuitiveness, and overall user experience of the software. |
| | Accessibility Test Cases | Ensure that the software is usable by individuals with disabilities, complying with accessibility standards. |
| Regression Testing | Regression Test Cases | Verify that new code changes or updates do not negatively impact existing functionalities. |
| | Smoke Test Cases | Execute a subset of essential test cases to quickly assess whether the software build is stable enough for further testing. |
| Exploratory Testing | Exploratory Test Cases | Testers explore the software without predefined scripts, identifying defects and issues based on intuition and experience. |
| Compatibility Testing | Browser Compatibility Test Cases | Test the software’s compatibility with different web browsers and versions. |
| | Device Compatibility Test Cases | Assess the software’s performance on various devices (desktop, mobile, tablets) and screen sizes. |
| Integration Testing | Top-Down Test Cases | Begin testing from the top level of the application’s hierarchy and gradually integrate lower-level components. |
| | Bottom-Up Test Cases | Start testing from the lower-level components and integrate them into higher-level modules. |
| Acceptance Testing | Alpha Testing Cases | Conducted by the internal development team or a specialized testing team within the organization. |
| | Beta Testing Cases | Involve external users or a select group of customers to test the software in real-world scenarios. |
| Load and Performance Testing | Load Test Cases | Simulate a specified number of concurrent users or transactions to assess the software’s performance under typical load conditions. |
| Mobile Testing | Usability Test Cases | Evaluate touch gestures, screen transitions, and overall user experience. |

Common mistakes to avoid when writing test cases

Writing effective test cases is crucial for successful software testing. To ensure your test cases are useful and efficient, it’s important to avoid common mistakes. Here are some of the most common mistakes to watch out for:

- Unclear objectives: Ensure that each test case has a clear and specific objective, outlining what you are trying to test and achieve.

- Incomplete test coverage: Don’t miss critical scenarios. Ensure your test cases cover a wide range of inputs, conditions, and edge cases.

- Overly complex test cases: Keep test cases simple, focusing on testing one specific aspect or scenario to maintain clarity.

- Lack of independence: Avoid dependencies between test cases, as they can make it challenging to isolate and identify issues.

- Poorly defined preconditions: Clearly specify preconditions that must be met before executing a test case to ensure consistent results.

- Assuming prior knowledge: Write test cases in a way that is understandable to anyone, even new team members unfamiliar with the system.

- Ignoring negative scenarios: Test not only positive cases but also negative scenarios, including invalid inputs and error handling.
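Several of these mistakes can be avoided structurally in automated tests. In the sketch below, which uses Python's standard `unittest` module, `setUp` states the precondition explicitly and builds fresh state before every test, so the tests stay independent and can run in any order; one test also covers a negative scenario. `AccountStore` is a hypothetical stand-in for the system under test.

```python
import unittest


class AccountStore:
    """Hypothetical in-memory account store standing in for the system under test."""

    def __init__(self):
        self.accounts = {}

    def register(self, username, password):
        if username in self.accounts:
            raise ValueError("username already taken")
        self.accounts[username] = password

    def delete(self, username):
        del self.accounts[username]


class AccountTests(unittest.TestCase):
    def setUp(self):
        # Precondition, stated explicitly: a fresh store containing one known user.
        # Every test gets its own store, so no test depends on another's leftovers.
        self.store = AccountStore()
        self.store.register("alice", "correct-horse")

    def test_duplicate_registration_is_rejected(self):
        # Negative scenario: registering an existing username must raise an error.
        with self.assertRaises(ValueError):
            self.store.register("alice", "another-password")

    def test_delete_removes_account(self):
        # One specific aspect per test: deletion removes exactly this account.
        self.store.delete("alice")
        self.assertNotIn("alice", self.store.accounts)
```

Saved as a module, it can be run with `python -m unittest` followed by the module name. Because `test_delete_removes_account` only deletes from its own store, it cannot cause `test_duplicate_registration_is_rejected` to fail, whatever order the runner picks.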