This is a guest posting by Bob Reselman

Kubernetes is fast becoming the go-to open-source technology for container orchestration at the enterprise level. It not only allows IT departments to deploy containers at scale automatically but also ensures that those containers will run reliably in a fault-tolerant manner.

Kubernetes, often abbreviated as k8s, was created by Google and announced to the public in 2014. Version 1.0 followed in 2015, when Google also donated the project to the Cloud Native Computing Foundation (CNCF), and in 2018 it became the first project to graduate from the CNCF. There’s so much demand for Kubernetes expertise that the CNCF now offers a Certified Kubernetes Administrator certification. Eventually, Kubernetes will affect just about everyone involved in the software development lifecycle: developers, operations personnel and test practitioners. It’s that cool.

But if you’re uninitiated, you might be wondering why Kubernetes is such a big deal. So let’s take a look at the details.

What Makes Kubernetes Cool?

Kubernetes solves a fundamental problem in distributed computing: how to keep large-scale, containerized applications that are subject to continuous change, both in terms of features and resource requirements, running reliably on the internet. Kubernetes solves the problem by using the concept of a predefined state to create, run and maintain container-driven applications over a cluster of physical or virtual machines.

Deployment engineers create the application (or service) state and submit it to Kubernetes. Kubernetes then creates, deploys, and maintains the application forever, or until the application is taken offline.

Let’s take a look at an example.

Imagine we have a cloud service that has a feature that puts a red border around any photo submitted to it. (See figure 1.)

Figure 1: A simple cloud service that modifies a photo

In addition, the service will save a copy of its work should users need one later on. This service is intended to support 10,000 requests a minute, which is pretty heavy demand — more than one computing instance can handle. Thus, we’ll need to have many computing instances, each running a copy of the photo processor. (Typically in a situation where there is redundant computing in force, a load balancer is used to route a request to an available instance among many.)
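To make "more than one computing instance can handle" concrete, here is a rough back-of-the-envelope sketch. The per-instance throughput (20 requests a second) and the 30% headroom are assumptions chosen purely for illustration; real numbers would come from measuring the photo processor itself.

```python
import math


def instances_needed(requests_per_minute: float,
                     per_instance_rps: float,
                     headroom: float = 0.3) -> int:
    """Estimate how many identical instances are needed to absorb a load.

    per_instance_rps and headroom are assumed inputs, not measured values.
    """
    required_rps = requests_per_minute / 60.0          # convert to requests/second
    usable_rps = per_instance_rps * (1.0 - headroom)   # keep some spare capacity
    return math.ceil(required_rps / usable_rps)


# 10,000 requests a minute, expressed per second.
print(round(10_000 / 60, 1))

# Assumed: each instance sustains 20 requests/second before headroom.
print(instances_needed(10_000, per_instance_rps=20))
```

Under these assumed figures the estimate comes out to a dozen instances, which is why a load balancer and some form of orchestration quickly become necessary.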

The application designer puts the photo processor logic into a Docker image and stores the container image in a public repository, such as Docker Hub. Eventually, the image will be deployed as an isolated container on a virtual machine.

Now it’s time to do a traditional deployment. Once the container image has been created, the deployment engineer needs to create a cluster of virtual machines, deploy a container on each machine, and then get all the networking and load balancing coordinated. Even using automation, there is a good deal of work that can be time-consuming and error-prone.

Let’s take another approach that uses Kubernetes.

Instead of going through the time-consuming work of creating the containers, deploying them to virtual machines and doing the network configuration required to make the service available to public consumers, the deployment engineer uses Kubernetes. She provisions a Kubernetes cluster in the cloud or in a data center, or she uses an existing Kubernetes cluster. Once a cluster is identified, the engineer creates a set of manifests (which are YAML files) that describe the various aspects of the application. The descriptions include the number of containers that need to be running at any given time, the location of the storage resource that will keep a copy of the augmented photo, and the routing configuration that defines how requests reach the photo processing service.

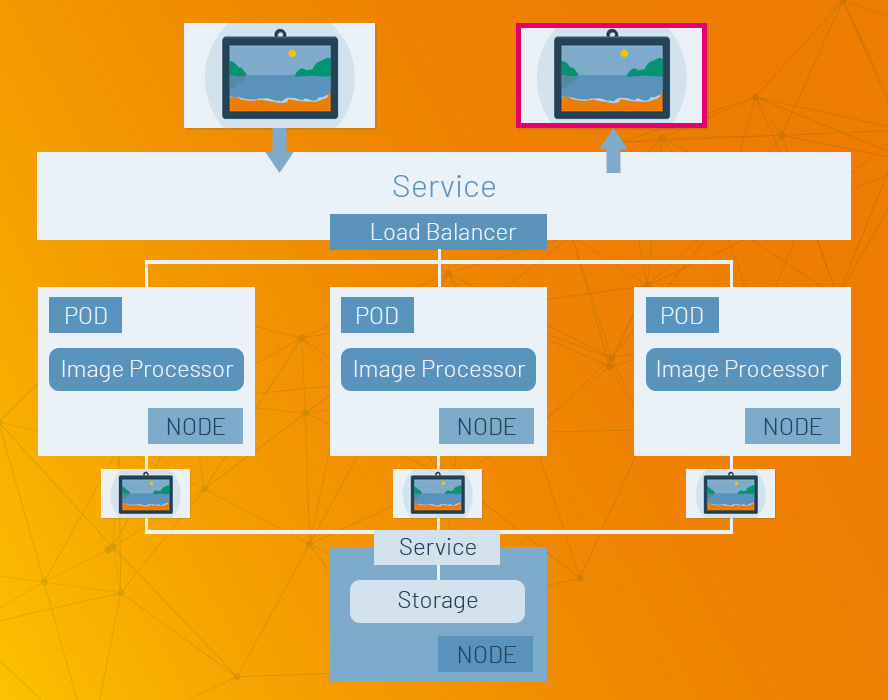

Included in the set of manifests is one that defines the structure of the pod that will host the photo processor container.
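As a rough illustration of what such a manifest contains, the sketch below builds a Deployment as a Python dictionary and prints it as JSON, which Kubernetes accepts alongside YAML. The image name `example/photo-processor:1.0`, the `app: photo-processor` label, the port and the replica count are all hypothetical values, not the ones used in the original service.

```python
import json

# Hypothetical label used to tie the Deployment to its pods.
APP_LABELS = {"app": "photo-processor"}

deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "photo-processor"},
    "spec": {
        # Desired state: how many pod copies should be running at any time.
        "replicas": 3,
        # The selector must match the labels in the pod template below.
        "selector": {"matchLabels": APP_LABELS},
        # The pod template: the structure of each pod that hosts the container.
        "template": {
            "metadata": {"labels": APP_LABELS},
            "spec": {
                "containers": [
                    {
                        "name": "photo-processor",
                        "image": "example/photo-processor:1.0",  # assumed image name
                        "ports": [{"containerPort": 8080}],      # assumed port
                    }
                ]
            },
        },
    },
}

manifest_json = json.dumps(deployment, indent=2)
print(manifest_json)
```

Saved to a file, a manifest like this could be submitted with `kubectl apply -f <file>`. The same structure written as YAML is the more common form.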

These manifests define the state of the application. The deployment engineer submits the manifests to Kubernetes, and Kubernetes finds the nodes (the physical or virtual machines in the cluster) that have the capacity to host the required number of pods.

Then Kubernetes creates the pods with their containers using container images that are downloaded from an image registry, such as Docker Hub. Kubernetes will create the number of pods required according to the deployment manifest and schedule them onto the identified nodes. Kubernetes uses information in the service manifest to create the Kubernetes service behind which the pods run. Finally, Kubernetes will expose the service to the public according to the description provided in the service manifest. (See figure 2.) Once the service is exposed to the public, the application is operational.
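The link between a service and the pods behind it is a label selector. The sketch below shows a minimal Service manifest as a Python dictionary, plus a small helper that mimics how a non-empty selector matches pod labels. The names, labels and port numbers are assumptions for illustration, and `selects` is a hypothetical helper, not part of any Kubernetes client library.

```python
import json

service = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": "photo-processor"},
    "spec": {
        # LoadBalancer asks the hosting platform for a publicly reachable address.
        "type": "LoadBalancer",
        # Requests are routed to any pod carrying all of these labels.
        "selector": {"app": "photo-processor"},
        # Public port 80 forwards to the (assumed) container port 8080.
        "ports": [{"port": 80, "targetPort": 8080}],
    },
}


def selects(selector: dict, pod_labels: dict) -> bool:
    """Return True when every selector key/value pair is present on the pod."""
    return all(pod_labels.get(key) == value for key, value in selector.items())


print(json.dumps(service, indent=2))
print(selects(service["spec"]["selector"], {"app": "photo-processor", "version": "1.0"}))
print(selects(service["spec"]["selector"], {"app": "something-else"}))
```

Because routing is based on labels rather than on fixed addresses, pods can come and go behind the service without callers noticing.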

Figure 2: A basic Kubernetes deployment runs application logic in a number of pods hosting containerized code that run behind a Kubernetes service

Now comes the really cool part. Once the application is deployed, Kubernetes guarantees that the state of the application will be maintained in the event of any internal failures. If a node goes offline, Kubernetes will recreate that node’s pods on another healthy node. If a pod fails on its own, it will be replaced. If for some reason the entire cluster blows up, all that’s required is to reapply the manifests to a new cluster and the application will be resurrected in its original state. Also, any container in a pod can be updated by Kubernetes using a rolling update across the cluster. If things don’t work out with the update, Kubernetes allows rollback.
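The idea of maintaining a declared state can be pictured as a loop that compares what should be running with what is running and closes the gap. The following is a toy model of that idea only. It is not how Kubernetes is implemented, and the function, pod and node names are made up for the example.

```python
import itertools


def reconcile(desired_replicas, pods, healthy_nodes, new_names):
    """One pass of a toy control loop.

    pods maps pod name -> node name. Pods on unhealthy nodes are dropped,
    then replacements are created on the least-loaded healthy node until the
    observed count matches the desired count. Scaling down is omitted.
    """
    # Keep only the pods whose node is still healthy.
    running = {name: node for name, node in pods.items() if node in healthy_nodes}
    if not healthy_nodes:
        return running  # nowhere to place replacements

    while len(running) < desired_replicas:
        # Count pods per healthy node and pick the least-loaded one.
        load = {node: 0 for node in healthy_nodes}
        for node in running.values():
            load[node] += 1
        target = min(healthy_nodes, key=lambda node: load[node])
        running[next(new_names)] = target
    return running


names = (f"photo-{i}" for i in itertools.count())

# Initial rollout: three pods spread over two nodes.
pods = reconcile(3, {}, ["node-a", "node-b"], names)
print(pods)

# node-b goes offline: its pod is lost and a replacement appears on node-a.
pods = reconcile(3, pods, ["node-a"], names)
print(pods)
```

Note that the lost pod is not moved. A new pod with a new name takes its place, which is one reason pods should be treated as disposable.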

As you can see, Kubernetes provides a high degree of automation out of the box. All that’s needed is to define the state of the application and let Kubernetes do the rest.

However, although the overall idea that drives Kubernetes is simple, working with the technology is not that easy. Kubernetes does take time to master. Creating manifests requires knowing a lot about the particulars of Kubernetes. Network and access configuration has a good many “gotchas.” And there are some significant security concerns that need to be addressed. Some development processes in a company’s software development lifecycle also might need to be modified to take full advantage of Kubernetes, including the way performance testing is approached.

Still, once an IT department gets up and running on Kubernetes, the technology provides a high degree of flexibility and resiliency — and the labor savings are considerable. But, again, you need to know what you are doing.

What Is the Impact of Kubernetes on Performance Testing?

Probably the biggest change test practitioners will have to make in the way they approach performance testing is to accommodate the distributed and ephemeral nature of applications running under Kubernetes. Remember, Kubernetes guarantees state, but where and when that state is realized is, for the most part, at the discretion of Kubernetes. Unless configured otherwise, the location of an application pod can change at any time within the Kubernetes cluster. (Kubernetes does have a mechanism called node affinity that restricts a pod to particular nodes, but this is not the default out of the box.)
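For test runs where placement needs to be fixed, a node-placement constraint goes into the pod spec. The sketch below adds a required node affinity rule keyed on the well-known `kubernetes.io/hostname` node label. The helper `pin_to_node`, the node name `node-a` and the container details are hypothetical.

```python
import json


def pin_to_node(pod_spec: dict, hostname: str) -> dict:
    """Return a copy of pod_spec that may only be scheduled on `hostname`."""
    pinned = dict(pod_spec)  # shallow copy, so the original spec is left untouched
    pinned["affinity"] = {
        "nodeAffinity": {
            # "required" means the scheduler will not place the pod elsewhere.
            "requiredDuringSchedulingIgnoredDuringExecution": {
                "nodeSelectorTerms": [
                    {
                        "matchExpressions": [
                            {
                                "key": "kubernetes.io/hostname",
                                "operator": "In",
                                "values": [hostname],
                            }
                        ]
                    }
                ]
            }
        }
    }
    return pinned


pod_spec = {
    "containers": [
        {"name": "photo-processor", "image": "example/photo-processor:1.0"}
    ]
}
pinned_spec = pin_to_node(pod_spec, "node-a")
print(json.dumps(pinned_spec, indent=2))
```

Pinning makes measurements easier to compare between runs, but it also removes some of the rescheduling behavior a realistic test may want to exercise.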

While measuring performance outside of the cluster — for example, measuring request and response time against a public URL — is still a pretty straightforward undertaking, understanding what is going on inside the cluster is more complex. In order to get a detailed understanding of internal performance, testers will need to rely on system monitors, logs, and distributed tracing tools to gather data. Aggregating runtime data at test time, across nodes and containers that are running in the cluster, is a common technique for determining performance behavior.
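As a small illustration of aggregating runtime data across nodes and pods, the sketch below groups latency samples and reports simple percentiles for each group. The sample records and their field names are invented for the example. In practice the data would come from logs, traces or a monitoring system.

```python
import math
from collections import defaultdict


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(samples, key):
    """Group latency samples by `key` (for example "node" or "pod")."""
    groups = defaultdict(list)
    for sample in samples:
        groups[sample[key]].append(sample["latency_ms"])
    return {
        name: {
            "count": len(latencies),
            "p50": percentile(latencies, 50),
            "p95": percentile(latencies, 95),
        }
        for name, latencies in groups.items()
    }


# Hypothetical samples collected during a test run.
samples = [
    {"node": "node-a", "pod": "photo-0", "latency_ms": 100},
    {"node": "node-a", "pod": "photo-0", "latency_ms": 110},
    {"node": "node-b", "pod": "photo-1", "latency_ms": 200},
    {"node": "node-b", "pod": "photo-1", "latency_ms": 400},
]

print(summarize(samples, "node"))
print(summarize(samples, "pod"))
```

Grouping by node versus by pod can point to different causes: a slow node suggests a resource problem on that machine, while a slow pod on an otherwise healthy node suggests a problem in that instance.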

Thus, performance testers need to have an operational facility with basic monitoring tools such as Prometheus, Grafana, InfluxDB, cAdvisor and Heapster (which has since been retired in favor of Metrics Server). These are the tools that keep an eye on the cluster and report ongoing operational behavior, as well as dangerous situations. Being able to work with these tools and the information they provide is essential for test practitioners. Otherwise, they’re flying blind. In fact, as more machine-to-machine activity takes place on the internet, rather than work being done between a human and a computer, machine monitoring throughout the cluster is going to become the primary way to determine the performance of systems under test.
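To give a feel for working with one of these tools, here is a minimal sketch of building a query for the Prometheus HTTP API and reading an instant-query result. The server address `http://prometheus.example:9090` is a placeholder, the response is canned rather than fetched from a live server, and the helper functions are hypothetical. The metric `container_cpu_usage_seconds_total` is one that cAdvisor exposes.

```python
import json
from urllib.parse import urlencode


def build_query_url(base_url: str, promql: str) -> str:
    """Build an instant-query URL for the Prometheus HTTP API."""
    return f"{base_url.rstrip('/')}/api/v1/query?{urlencode({'query': promql})}"


def parse_vector(payload: dict, label: str) -> dict:
    """Turn an instant-vector response into {label value: sample value}."""
    if payload.get("status") != "success":
        raise ValueError("query did not succeed")
    data = payload["data"]
    if data["resultType"] != "vector":
        raise ValueError("expected an instant vector")
    # Each sample's "value" is a [timestamp, "value as string"] pair.
    return {
        series["metric"].get(label, ""): float(series["value"][1])
        for series in data["result"]
    }


# Per-pod CPU usage rate over the last five minutes.
promql = "sum by (pod) (rate(container_cpu_usage_seconds_total[5m]))"
url = build_query_url("http://prometheus.example:9090", promql)
print(url)

# Canned response in the shape the API returns (values are invented).
canned = json.loads("""
{"status": "success",
 "data": {"resultType": "vector",
          "result": [
            {"metric": {"pod": "photo-0"}, "value": [1700000000, "0.42"]},
            {"metric": {"pod": "photo-1"}, "value": [1700000000, "0.37"]}
          ]}}
""")
cpu_by_pod = parse_vector(canned, "pod")
print(cpu_by_pod)
```

Collecting numbers like these before, during and after a load test gives the internal view that external response times alone cannot provide.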

As Kubernetes becomes more integrated into a broader range of companies, performance testing will go from simply exercising URLs to invoking a variety of activities against a cluster of machines operating ephemerally, and then analyzing many different types of results that are external and internal to the cluster. It’s a fundamental shift in the way performance testing is approached.

Putting It All Together

Given its wide range of adoption, we can expect Kubernetes to continue to have an impact throughout the IT landscape for a long time to come. And test practitioners will not be immune to its influence. In fact, there’s a good case to be made that as Kubernetes continues to proliferate through IT, test practitioners will need to re-examine their approach to testing applications that run under the technology.

Working with Kubernetes requires a different approach to distributed computing. Understanding the basics of Kubernetes is important for anyone working around the technology today, test personnel included. Being able to adopt performance testing practices that are compatible with Kubernetes and continue to meet the needs of the organization will be a challenge for many an IT department. But, given the power and cost savings that Kubernetes brings to a company’s technical infrastructure, it’s a challenge worth meeting.