This is a guest post by Bob Reselman.

There’s a common misperception that a Linux container is nothing more than a lightweight virtual machine (VM). But that’s not the case. While Linux containers seem to have many of the features that are also present in a VM, they are architecturally different. This difference is worth knowing about for developers and test practitioners.

Let’s look at how containers and VMs differ and then discuss the benefits and hazards of each.

Containers are tightly bound to the Linux file system

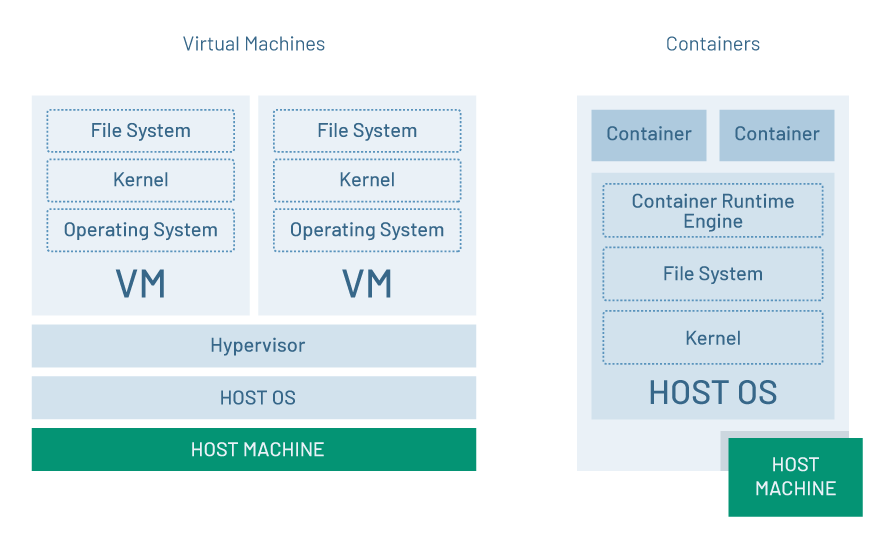

The essential difference between a container and a VM is that the VM has a kernel that is separate from the host machine, while a container uses the kernel of the host machine. (See figure 1.)

The kernel is the lowest level of operating system software, the layer that interfaces directly with the computer’s hardware. It mediates between the application executables running on the computer and the computer itself. It also allows processes, such as services and servers, to get information from each other using inter-process communication (IPC).

The kernel handles disk I/O, network traffic and storage. For all intents and purposes, the kernel runs the machine.

Figure 1: A virtual machine is a distinct computer with its own virtual kernel, while a container uses the actual kernel of the host system.

Having a distinct kernel versus using a host’s kernel is important because it allows the VM to run an operating system that is different from the host’s operating system. A Linux host system can support a VM that’s running another operating system, such as Windows, but containers running on a Linux host must run Linux.
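One quick way to see the shared kernel for yourself is to ask the operating system which kernel it is running. The sketch below is a minimal illustration, and the function name `kernel_info` is made up for this example. Run on a Linux host and then inside a container on that host, it should report the same kernel release, whereas a VM reports the release of its own guest kernel.

```python
import platform


def kernel_info():
    """Return the operating system name and kernel release seen by this process."""
    info = platform.uname()
    return {"system": info.system, "release": info.release}


if __name__ == "__main__":
    # Compare this output on the host and inside a container running on it.
    print(kernel_info())
```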

Also, while a VM will have its own virtual file system separate from the host machine, a container uses the file system of the host machine.

So, the question you might be asking is, how can a container appear to be an isolated computing unit like a VM if it uses the host’s kernel and file system? The answer is that containers use three basic isolation mechanisms that are part of the Linux operating system.

The first mechanism is a namespace. A namespace is a Linux isolation feature that restricts what a set of processes can see. The kernel provides several kinds of namespaces, covering resources such as process IDs, network interfaces, mount points and user IDs. Processes assigned to a namespace see only the resources that belong to it. Thus, it’s possible to have a number of processes running on a computer with certain processes assigned to one namespace and another set of processes assigned to a different namespace. The processes in the first namespace will have no intrinsic awareness of the processes in the other namespace.
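On Linux, the namespaces a process belongs to are visible as symbolic links under `/proc/<pid>/ns`, with targets such as `pid:[4026531836]`. The following is a minimal sketch of how to read them. The helper names `parse_ns_link` and `list_namespaces` are invented for this example, and the code simply returns an empty result on systems without `/proc`. Two processes that show the same number for a given entry share that namespace, while a process in a container will typically show different numbers than a process on the host.

```python
import os
import re

# A namespace link target looks like "pid:[4026531836]".
_NS_LINK = re.compile(r"^(?P<kind>[a-z_]+):\[(?P<inode>\d+)\]$")


def parse_ns_link(target):
    """Split a namespace link target into (kind, inode), or return None."""
    match = _NS_LINK.match(target)
    if match is None:
        return None
    return match.group("kind"), int(match.group("inode"))


def list_namespaces(pid="self"):
    """Map each entry in /proc/<pid>/ns to its namespace inode number.

    Returns an empty dict when /proc is unavailable or unreadable.
    """
    ns_dir = f"/proc/{pid}/ns"
    result = {}
    try:
        names = os.listdir(ns_dir)
    except OSError:
        return result  # not Linux, no such process, or no permission
    for name in names:
        try:
            parsed = parse_ns_link(os.readlink(os.path.join(ns_dir, name)))
        except OSError:
            continue
        if parsed is not None:
            result[name] = parsed[1]
    return result


if __name__ == "__main__":
    for name, inode in sorted(list_namespaces().items()):
        print(f"{name}: {inode}")
```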

The second mechanism is cgroups. A cgroup, which is an abbreviation for control group, is a Linux feature that limits how much of a resource, such as CPU time or memory, a group of processes can consume. For example, you can make a cgroup that limits the percentage of a CPU a process can use.
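To make the CPU example concrete: under cgroup v2, the CPU limit for a group is stored in a file named `cpu.max` as two numbers, a quota and a period in microseconds, or as `max` followed by the period when there is no limit. The sketch below parses that format. The function names are made up for this example, and the default path `/sys/fs/cgroup/cpu.max` is an assumption about where the file appears inside a container, so it may differ on your system.

```python
def parse_cpu_max(text):
    """Return the CPU limit as a number of CPUs, or None when unlimited.

    Expects the cgroup v2 cpu.max format: "<quota> <period>" or "max <period>".
    """
    fields = text.split()
    if len(fields) != 2:
        raise ValueError(f"unexpected cpu.max content: {text!r}")
    quota, period = fields
    if quota == "max":
        return None
    return int(quota) / int(period)


def read_cpu_limit(path="/sys/fs/cgroup/cpu.max"):
    """Read the limit from an assumed path; None if unlimited or unreadable."""
    try:
        with open(path) as handle:
            return parse_cpu_max(handle.read())
    except (OSError, ValueError):
        return None


if __name__ == "__main__":
    limit = read_cpu_limit()
    print("no CPU limit found" if limit is None else f"limited to {limit} CPUs")
```

A quota of 50000 with a period of 100000, for instance, means the group may use half of one CPU.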

The third mechanism is the union file system. This feature makes it so that a container’s file system appears to be an isolated resource, while the reality is that the container is sharing the host’s file system. This is particularly relevant to the concept of container layers that represent executables within the container, such as MySQL.

While it may appear that multiple containers are each running an application under a unique file system, behind the scenes there is a base set of files for the application, as well as a set of overlay files that are unique to each container. These overlay files are called the write layer of the container. The result is that whenever an application in the container appears to write to its root file system, it is really writing to a writable layer that is specific to that container, and the shared base files are left unchanged.
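The layering idea can be modeled in a few lines. The sketch below is a toy model only, not how a real union file system is implemented: it uses Python’s `collections.ChainMap`, which reads through a stack of dictionaries but sends every write to the first one. The file names and contents are hypothetical.

```python
from collections import ChainMap

# Read-only base layer shared by every container started from the same image.
base_layer = {"/etc/my.cnf": "port=3306", "/usr/bin/mysqld": "<binary>"}


def new_container_view(base):
    """Stack an empty, container-specific write layer on top of the shared base."""
    return ChainMap({}, base)


container_a = new_container_view(base_layer)
container_b = new_container_view(base_layer)

# A write in container A lands only in A's write layer.
container_a["/etc/my.cnf"] = "port=3307"

print(container_a["/etc/my.cnf"])  # A sees its own modified copy
print(container_b["/etc/my.cnf"])  # B still reads the shared base file
print(container_a.maps[0])         # contents of A's write layer
```

Each container sees what looks like a private file system, yet unmodified files such as the server binary exist only once, in the base layer.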

Put these three features together and you get a container. All the work that goes into coordinating container creation, operation and management is done by a container runtime engine, which is a service that runs on the host machine.

The most popular container runtime engine is dockerd, but others include rkt and containerd.


Benefits and hazards

To make a long story short, a virtual machine is a complete software representation of a computer running on a physical host. You can have many VMs running on a single host. Each VM will have its own virtual kernel, and thus appear to have its own file system and CPU as well as its own network and disk devices.

A container is an isolated unit that behaves similarly to a VM but uses the host’s kernel as well as other system resources of the host. VMs appear to be distinct from the host, while containers run on top of the host.

The essential benefit of a container over a virtual machine is that a container will load into memory very fast, on the order of milliseconds when proper preparations are made. It takes much longer to load a VM onto a host. The fast load time makes containers the deployment unit of choice for distributed applications that need to scale instances on demand at near-instantaneous speeds. This is why we see a proliferation of container orchestration technologies such as Kubernetes and Docker Swarm.

The overriding risk of containers is that they run on top of the host and have access to the host’s kernel. A container running with root privileges can wreak havoc on the host machine. This is a real threat. By contrast, running as root on a VM can cause trouble for the VM itself, but it’s rare for that sort of damage to make its way into the host machine. Virtual machine hypervisor technology does a good job of standing in the way.
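One common precaution is to make sure the application inside the container does not run as root in the first place. The sketch below shows a guard that an application could call at startup. The function names are made up for this example, and it is only an illustration of the idea: it checks the effective user ID as seen inside the container and says nothing about how that ID maps to a user on the host.

```python
import os


def is_root(euid=None):
    """Return True when the effective user ID is 0 (root)."""
    if euid is None:
        geteuid = getattr(os, "geteuid", None)
        if geteuid is None:
            return False  # platform without POSIX user IDs
        euid = geteuid()
    return euid == 0


def refuse_root(euid=None):
    """Raise PermissionError instead of continuing to run as root."""
    if is_root(euid):
        raise PermissionError("refusing to run as root inside a container")


if __name__ == "__main__":
    refuse_root()
    print("running as an unprivileged user")
```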

Another thing to think about in terms of container technology is that there’s a lot more to know in terms of having containers work together and share data. For example, you need to know a lot about Docker in order to have a Docker container that hosts an application that uses Docker. Here’s an example I made of a container that runs a Jenkins server that, in turn, uses the host’s instance of Docker to create a container as part of the CI/CD process.

While it’s true that container orchestration technology takes over a good deal of managing container-to-container interaction, it’s a “pay me now or pay me later” type of thing. At some point, you have to know a lot about a container just to get an application up and running beyond a simple Hello World.

With a VM, once you get it going, there’s not much more to learn other than what you needed to know to get your application up and running on a desktop system. The downside is that the reduced learning curve comes with a price, and that price is speed.

Putting it all together

There’s a lot to be said about container technology. You get much of the isolation provided by virtual machines, with much faster load times. But there are tradeoffs. With a virtual machine, it’s pretty much business as usual once the VM is up and running. Containers are a more complex technology for the developer and test practitioner.

Also, containers present significant security risks unless precautions are taken. But, given that containers are so prevalent in the technology landscape these days, implementing best practices for container security requires little more than an hour searching the internet for information and a willingness to then do the right thing. In fact, there’s a whole industry that’s emerged around container security.

Regardless of which technology you embrace — virtual machines, containers or both — the important thing to understand is that although both technologies enable independent and isolated computing at scale, they are not the same. A container is not a lightweight virtual machine and vice versa. They are different in very distinct ways, and knowing the difference is important.


Article by Bob Reselman, a nationally known software developer, system architect, industry analyst, and technical writer/journalist. Bob has written many books on computer programming and dozens of articles about topics related to software development technologies and techniques, as well as the culture of software development. Bob is a former Principal Consultant for Cap Gemini and Platform Architect for the computer manufacturer Gateway. In addition to his software development and testing activities, Bob is in the process of writing a book about the impact of automation on human employment. He lives in Los Angeles and can be reached on LinkedIn at www.linkedin.com/in/bobreselman.