The automated tests created as part of the behavior-driven development (BDD) process provide us with numerous benefits:

- They provide a test suite that lets us know if we have any regressions.

- They free up time for the exploratory testing we still need to do.

- They document the capabilities of the system—if the tests all pass, we know we can trust them as documentation.

It would seem that, with these potential benefits, having more automated tests is always better—but that’s not necessarily the case.


When to Question the Status Quo

An organization I worked with proudly told me they’d produced at least 3,500 automated acceptance tests. These tests required several hundred hours to run: The tests drove their UI and took, on average, six minutes per test. Do the math. No, wait, don’t do the math; you’ll be appalled.
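For anyone who does want to do the math, here is a small sketch. It optimistically assumes that tests split perfectly evenly across machines with no setup, scheduling, or rerun overhead. `suite_hours` and `machines_needed` are illustrative helpers, not part of the organization's tooling.

```python
import math


def suite_hours(test_count: int, minutes_per_test: float, machines: int = 1) -> float:
    """Wall-clock hours for one run, assuming an ideal, even split across machines
    (no setup, scheduling, or rerun overhead)."""
    if machines < 1:
        raise ValueError("machines must be at least 1")
    total_minutes = test_count * minutes_per_test
    return total_minutes / machines / 60


def machines_needed(test_count: int, minutes_per_test: float, window_hours: float) -> int:
    """Smallest machine count that fits the run into a time window (same ideal assumptions)."""
    return math.ceil(suite_hours(test_count, minutes_per_test) / window_hours)


print(f"serial: {suite_hours(3500, 6):.0f} hours")                # serial: 350 hours
print(f"machines for 11 hours: {machines_needed(3500, 6, 11)}")  # machines for 11 hours: 32
```

Even under those ideal assumptions, 3,500 six-minute tests take 350 hours serially. Squeezing them into an 11-hour overnight window takes about 32 machines, in the same ballpark as the couple dozen machines the organization relied on.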

I asked about the size of the system. What did it have to do? Roughly how many screens were involved? Was there any hidden complexity, such as a massive number of business rules hidden beneath the surface (what I call an “iceberg system”)? Their answer suggested a medium-sized system—at most, a couple hundred thousand lines of C# code. Specs-by-example tests in comparable systems I’d encountered typically numbered around 300 or 400, taking anywhere from a few minutes to a few hours to execute.

Clever scaling across a couple dozen machines allowed my customer’s many thousands of tests to complete in about 11 hours each night. They had also built measures to rerun tests that might have failed spuriously, along with other clever monitoring. The upshot: only a few person-hours spent each morning wading through the inevitable dozen or so failing tests. (And yes, the word “only” in the preceding sentence is intentionally sarcastic.)
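The article doesn't describe how those rerun measures worked. One common approach, sketched hypothetically here, reruns each failure a few times and labels a test "flaky" if a rerun passes. `run_with_reruns` and `make_flaky` are invented names, and the fake tests stand in for real UI tests. Note that every rerun of a six-minute UI test costs another six minutes, and a "flaky" or "fail" verdict still needs a person to decide whether something is really wrong.

```python
from typing import Callable, Dict


def run_with_reruns(tests: Dict[str, Callable[[], bool]], max_reruns: int = 2) -> Dict[str, str]:
    """Run each test once; rerun any failure up to `max_reruns` times.

    Verdicts: "pass" (passed the first time), "flaky" (failed, then passed
    on a rerun), "fail" (never passed).
    """
    verdicts: Dict[str, str] = {}
    for name, test in tests.items():
        if test():
            verdicts[name] = "pass"
            continue
        verdicts[name] = "fail"
        for _ in range(max_reruns):
            if test():
                verdicts[name] = "flaky"
                break
    return verdicts


def make_flaky(failures_before_pass: int) -> Callable[[], bool]:
    """Build a fake test that fails a fixed number of times, then passes."""
    calls = 0

    def test() -> bool:
        nonlocal calls
        calls += 1
        return calls > failures_before_pass

    return test


suite = {
    "saves_policy": lambda: True,
    "timing_sensitive_login": make_flaky(1),  # times out once, then passes
    "broken_checkout": lambda: False,
}
print(run_with_reruns(suite))
# {'saves_policy': 'pass', 'timing_sensitive_login': 'flaky', 'broken_checkout': 'fail'}
```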

We’re sometimes great at emulating frogs in a pot of water slowly coming to a boil. I can imagine hearing these statements uttered at some point during the evolution of this amazingly oversized test suite:

“The test run is taking an hour to run. It’d be nice if we could see our builds complete a few more times per day so that we could correct any problems sooner.”

“It’s taking several hours for the test run to complete. We only get feedback on things we push up in the morning.”

“It’s taking most of the night to run! It’s barely finishing by the time we get in. And sometimes it fails completely early on, and we get zero feedback!”

“It doesn’t even finish overnight. We need to scale to two machines.”

“We need to find a server farm and pay for an additional sysadmin.” (This was just a little before the rise of AWS.)

At some point, it would have been nice if someone had dared to say, “Maybe we’re doing something wrong.”

The next time things seem suspect, stop and say, “Maybe we’re doing something wrong.” Not much is black-and-white wrong in software development, but it’s certainly wrong to let a frog slowly boil to death.

Driving the UI: A Slow Highway to Hell

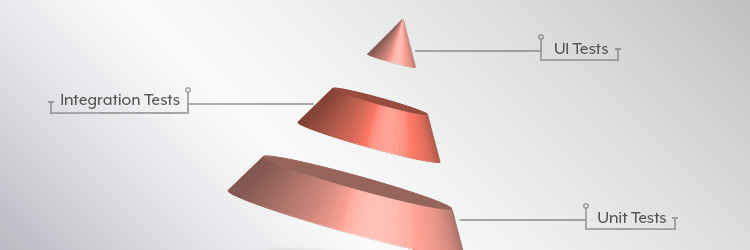

A brief look at most versions of the testing pyramid suggests that, out of all the types of automated test, UI-based tests should constitute the fewest. Why is that?

As the story above suggests, everything takes much longer when tests drive the user interface. We should instead test most of our voluminous bits of logic using fast-feedback unit tests. If the business needs visibility into these permutations and combinations, we can expose them via BDD tests that interact with an API, or even call a function directly, rather than with the UI. Save the automated UI tests for verifying end-to-end concerns: Does the application flow properly? Is everything wired together properly from end to end?
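To make "interacting with a function directly" concrete, here is a hypothetical sketch: an invented premium rule checked against a table of examples, the same shape a spec-by-example scenario outline takes, but with no browser in the loop. `Applicant`, `premium`, and the rates are illustrative assumptions, not rules from the system described above. Each example runs almost instantly instead of taking minutes.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Applicant:
    age: int
    prior_claims: int


def premium(applicant: Applicant, base: int = 500) -> int:
    """Hypothetical premium rule, in whole currency units:
    - applicants under 25 pay a 20% surcharge
    - each prior claim adds 10% of the base
    - claim-free applicants get a 5% discount
    """
    amount = base
    if applicant.age < 25:
        amount += base * 20 // 100
    amount += applicant.prior_claims * (base * 10 // 100)
    if applicant.prior_claims == 0:
        amount -= base * 5 // 100
    return amount


# Spec-by-example table: each row is one permutation the business cares about.
EXAMPLES: List[Tuple[Applicant, int]] = [
    (Applicant(age=30, prior_claims=0), 475),
    (Applicant(age=22, prior_claims=0), 575),
    (Applicant(age=30, prior_claims=2), 600),
    (Applicant(age=22, prior_claims=1), 650),
]


def failing_examples() -> List[Tuple[Applicant, int, int]]:
    """Return (applicant, expected, actual) for every row that doesn't match."""
    return [
        (applicant, expected, premium(applicant))
        for applicant, expected in EXAMPLES
        if premium(applicant) != expected
    ]


print(failing_examples() or "all examples pass")  # all examples pass
```

The UI still needs a test or two showing that the premium is wired through to the screen, but the combinations themselves live here.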

User interface tests are brittle and unpredictable. They break easily when the UI changes, which tends to happen often. They also fail spuriously because of odd timing or timeout issues, and every such failure costs us time spent determining whether there’s a real problem.

When our UI design changes but the system’s behavior does not, we incur extra effort to keep the tests running. If we’ve written the tests declaratively (as we should), we must update the “glue” code; if our tests are imperative, we must update the tests themselves.
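To illustrate the declarative style, here is a minimal, self-contained sketch of a scenario and its glue code. The step registry, `FakeBrowser`, and the CSS-style selectors are hypothetical stand-ins; a real suite would use a BDD tool such as Cucumber, SpecFlow, or behave, plus a real UI driver. The scenario text names only the user's goal, and the selectors live in the glue, so a redesigned screen means editing the glue constants rather than the scenarios.

```python
import re
from typing import Callable, Dict, List, Tuple


class FakeBrowser:
    """Hypothetical stand-in for a real UI driver; it just records field values."""

    def __init__(self, save_button: str = "#save-policy") -> None:
        self.save_button = save_button
        self.fields: Dict[str, str] = {}
        self.saved: Dict[str, str] = {}

    def fill(self, selector: str, value: str) -> None:
        self.fields[selector] = value

    def click(self, selector: str) -> None:
        # Clicking the save button "persists" whatever has been filled in.
        if selector == self.save_button:
            self.saved = dict(self.fields)


# UI details live only in the glue layer. If a redesign renames these
# (hypothetical) selectors, only these constants change, not the scenarios.
PET_EMAIL_FIELD = "#pet-email"
SAVE_BUTTON = "#save-policy"

STEPS: List[Tuple[re.Pattern, Callable[..., None]]] = []


def step(pattern: str) -> Callable[[Callable[..., None]], Callable[..., None]]:
    """Register a glue function for step text matching `pattern`."""
    def register(fn: Callable[..., None]) -> Callable[..., None]:
        STEPS.append((re.compile(pattern), fn))
        return fn
    return register


@step(r"When the agent saves a policy with pet email (\S+)")
def save_policy(browser: FakeBrowser, email: str) -> None:
    browser.fill(PET_EMAIL_FIELD, email)
    browser.click(SAVE_BUTTON)


@step(r"Then the policy records pet email (\S+)")
def policy_records_email(browser: FakeBrowser, email: str) -> None:
    assert browser.saved.get(PET_EMAIL_FIELD) == email


def run_scenario(lines: List[str], browser: FakeBrowser) -> None:
    """Dispatch each declarative step to the first glue function whose pattern matches."""
    for line in lines:
        for pattern, fn in STEPS:
            match = pattern.fullmatch(line)
            if match:
                fn(browser, *match.groups())
                break
        else:
            raise LookupError(f"no glue code for step: {line!r}")


# A declarative scenario: it states the user's goal, not which widgets to poke.
scenario = [
    "When the agent saves a policy with pet email rex@example.com",
    "Then the policy records pet email rex@example.com",
]
run_scenario(scenario, FakeBrowser())
```

An imperative version of the same scenario would spell out "fill #pet-email" and "click #save-policy" in the scenario text itself, so every selector change would ripple into the scenarios.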


Thousands of Tests—Really?

My customer sought to deliver (internally, at least) new functionality at the completion of each two-week iteration. Their stories effectively represented units of work: “Add a field on the policy screen to capture the customer’s pet’s email address.” To verify that each story was complete, the test folks insisted on adding new scenarios for every story: “Verify that the policy screen contains the pet’s email address.” In other words, the scenario’s test had to navigate through the application and assert that the email address appeared on a certain screen. Never mind that a scenario verifying the information saved with a policy might already have existed.

One story, one-plus test: This is a recipe for a messy test explosion, and it’s how you end up with ten times the tests you really need. That the tests also happened to drive the UI, slowly, only made things another order of magnitude worse.

Your features and scenarios must be groomed carefully over time, even more so than your backlog. If you think of each test as a specification by example, you start to think of the collection of tests as comprehensive documentation of what your system does. And the more you seek to use it as documentation, the more likely you are to organize it well, keep it clean, and keep it meaningful.

How best to organize your tests is a topic for another post. For now, the most useful principle to keep in mind is that each scenario should describe how a user accomplishes an end goal in your system. Viewing a field on a screen might occasionally be an end goal. More often, populating that field is one step toward accomplishing some other, more interesting end goal.

A story represents some unit of work you accomplish. Not every story represents a new end goal. You should frequently be updating scenarios, not always adding new ones.
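As a hypothetical sketch of updating rather than adding: suppose a check already verifies that a saved policy retains everything the agent captured. The pet-email story then changes that check's example data (and the shape of `Policy`) instead of spawning a new "the screen shows the field" scenario. `Policy`, `PolicyStore`, and the field names are invented for illustration; in a real BDD suite the same idea would be an edited scenario and its glue rather than a plain function.

```python
from dataclasses import asdict, dataclass
from typing import Dict


@dataclass(frozen=True)
class Policy:
    holder: str
    pet_name: str
    pet_email: str  # the only change the "capture the pet's email" story needed here


class PolicyStore:
    """Hypothetical in-memory store standing in for the real persistence layer."""

    def __init__(self) -> None:
        self._rows: Dict[str, dict] = {}

    def save(self, policy_id: str, policy: Policy) -> None:
        self._rows[policy_id] = asdict(policy)

    def load(self, policy_id: str) -> Policy:
        return Policy(**self._rows[policy_id])


def test_saved_policy_retains_captured_details() -> None:
    # Existing end-goal check: everything captured on a policy survives a save.
    # The new story updates this example with a pet email rather than adding
    # a separate scenario that only inspects the screen.
    store = PolicyStore()
    policy = Policy(holder="Pat Doe", pet_name="Rex", pet_email="rex@example.com")
    store.save("P-1", policy)
    assert store.load("P-1") == policy


test_saved_policy_retains_captured_details()
```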

Article written by Jeff Langr. Jeff has spent more than half a 35-year career successfully building and delivering software using agile methods and techniques. He’s also helped countless other development teams do the same by coaching and training through his company, Langr Software Solutions, Inc.

In addition to being a contributor to Uncle Bob’s book Clean Code, Jeff is the author of five books on software development:

- Modern C++ Programming With Test-Driven Development

- Pragmatic Unit Testing

- Agile in a Flash (with Tim Ottinger)

- Agile Java

- Essential Java Style

He is also on the technical advisory board for the Pragmatic Bookshelf.

Jeff resides in Colorado Springs, Colorado, US.
