What is the value of QA metrics? Is there any point in expending effort to measure, analyze, review, and then act on the results? The kind of QA metrics teams need has evolved with the widespread adoption of the Agile software development methodology. QA metrics once measured the number of tests executed and the number that passed or failed, but these metrics no longer provide significant value for improving either the quality of your QA testing or the quality of a release.

The top QA metrics provide real business value and stimulate changes that improve the customer experience by improving the quality of software application releases. It sounds easy enough: measure a few things, review the results, and use them to continuously improve your test and application quality.

The problem with QA metrics is that they are difficult to quantify because they are largely subjective. Additionally, measuring QA metrics is resource intensive. However, metrics are important for gauging how effective your QA testing and development teams’ processes are. After all, it’s impossible to improve without a measure of where you started. Agile teams practice continuous improvement, and without measuring QA and development team metrics, they don’t have a baseline to improve from.

This principle applies to every software development organization and team. If issues revealed by QA metrics are continuously ignored, application quality does not improve. Ignoring issues does not make them go away. The best QA metrics won’t improve software quality unless they lead to action. Measurement and action together provide business value for the organization and the software development team.

This guide lists the top 5 QA metrics that provide data you can use to continuously improve the software application’s quality.

QA Metric #1 – User Sentiment

The number one metric for measuring application quality is user sentiment, or the quality of the user experience with the application. Capture and analyze customer feedback from customer support calls, surveys, and conversations with product managers or others working directly with customers. Understanding how customers use new features or enhancements may also reveal other areas of improvement to address in future sprints. Develop a plan to deliver on the customer’s needs and keep the application useful for supporting user workflows.

The user sentiment metric adds value across the business by measuring the perceived application quality, usability, stability, and level of brand value. You can measure user sentiment using customer interviews, online or live surveys, or customer input testing sessions. Customer input sessions bring customers in and have them provide input directly about the intuitiveness and quality of the user experience.

QA Metric #2 – Defects Found in Production

The number of defects reported in production after a release is the number 2 QA metric. Often called defect escapes or defect leaks, this metric is critical to understanding how defects end up in a production release. Analyzing the defects found in production reveals gaps or problems in one or more of the following areas:

- Requirement changes or scope creep

- Undocumented features added or removed within related stories

- New features added without documented stories or requirements

- Lack of test case depth or breadth in specific areas of the application

- Ineffective or missing coverage of backend processing or integrated connectivity functions

- Imbalance of testing speed and test coverage

- Test environment to production environment infrastructure differences

No one wants hotfixes. Hotfixes to production are stressful, time-sensitive, and often rushed. They also tend to cause additional defects, because they are made after test execution on the release has been completed. Unfortunately, hotfixes are necessary to retain customers and deliver a higher-quality customer experience. Customers are essential for businesses to remain active and successful. Without customers wanting to use the application, there is no business and no software development team.

Defects found in production are measured by counting the defects documented by customers or the support team. Another measure is the number of hotfixes or patch releases created after a release.
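As a rough illustration of the counting described above, the following Python sketch tallies production defects and hotfixes per release. The record layout (`id`, `release`, `found_in`) and the `hotfixes` list are assumptions made for this example, not the export format of any particular defect tracker.

```python
from collections import Counter

# Hypothetical defect records; the field names are assumptions for this sketch.
defects = [
    {"id": "D-101", "release": "2.4", "found_in": "production"},
    {"id": "D-102", "release": "2.4", "found_in": "qa"},
    {"id": "D-103", "release": "2.4", "found_in": "production"},
    {"id": "D-104", "release": "2.5", "found_in": "qa"},
]

# Hypothetical list of hotfix or patch releases, tagged with the release they patch.
hotfixes = [
    {"name": "2.4.1", "release": "2.4"},
]


def production_defects_per_release(records):
    """Count defects whose 'found_in' field is 'production', grouped by release."""
    return Counter(r["release"] for r in records if r["found_in"] == "production")


def hotfixes_per_release(records):
    """Count hotfix or patch releases, grouped by the release they patch."""
    return Counter(r["release"] for r in records)


escaped = production_defects_per_release(defects)
patched = hotfixes_per_release(hotfixes)

for release in sorted({d["release"] for d in defects}):
    print(f"release {release}: {escaped[release]} production defect(s), "
          f"{patched[release]} hotfix(es)")
```

A `Counter` returns zero for releases with no matching records, so releases without escapes still appear in the report.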

QA Metric #3 – Test Case Coverage

Number three on the top 5 QA metrics list is Test Case Coverage. Measuring test case coverage means analyzing the quality and content of the test cases being used in testing. Measuring test coverage ensures all stories, requirements, and acceptance criteria are covered in one or more test cases. Many software organizations make the mistake of equating test coverage with the number of tests executed. The number of tests executed is only useful for marketing purposes; it does nothing to indicate the validity of the tests or coverage against requirements.

Measuring the quality, depth, and breadth of test coverage involves reviewing test cases and mapping them to user stories and acceptance criteria. For example, use the test management tool to link each test case to its requirement or user story. Typically, test management tools support a direct link to a story ID, requirement ID, or document. If not, you can map manually by adding the story ID or requirement number to the test case description, summary, or steps. Manual linking is more difficult to track, but it still provides the connection needed to compare test case coverage against user stories or requirements and ensure all features are properly covered with valid test cases.

Review test cases and user stories to ensure all stories and acceptance criteria are covered accurately in test cases. When traceability exists, teams ensure accurate test coverage exists for all functionality. However, without valid test cases, defects may go unnoticed.
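The mapping between test cases and stories can be checked with a small script. The sketch below assumes the story IDs and the test-case-to-story links have already been exported from a test management tool; the IDs and the `coverage_report` helper are hypothetical.

```python
# Hypothetical story IDs and test-case links, assumed for this example.
stories = ["US-1", "US-2", "US-3", "US-4"]
test_cases = {
    "TC-10": ["US-1"],
    "TC-11": ["US-1", "US-2"],
    "TC-12": ["US-4"],
    "TC-13": [],  # a test case with no linked story
}


def coverage_report(story_ids, links):
    """Return (covered, uncovered, percent) for stories linked to at least one test case."""
    linked = {story for linked_stories in links.values() for story in linked_stories}
    covered = [s for s in story_ids if s in linked]
    uncovered = [s for s in story_ids if s not in linked]
    percent = 100.0 * len(covered) / len(story_ids) if story_ids else 0.0
    return covered, uncovered, percent


covered, uncovered, percent = coverage_report(stories, test_cases)
# Test cases that trace to no story are worth reviewing too.
unlinked_tests = [tc for tc, linked in test_cases.items() if not linked]

print(f"stories covered: {percent:.0f}%")
print(f"stories without a test case: {uncovered}")
print(f"test cases without a story: {unlinked_tests}")
```

This only confirms that a link exists. It says nothing about whether the linked test case checks the acceptance criteria accurately, so the manual review described above is still needed.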

QA Metric #4 – Defects Across Sprints

QA metrics number 4 and 5 on this list are related. Measuring defects across sprints comes in at number 4 because it measures defect longevity through the development lifecycle. The longer a defect remains in the pre-release code, the more likely the defect will end up in the final production release.

Defect slippage across sprints is generally caused by overcommitting on stories or not delivering all the committed stories during a sprint. Moving stories to done must include both development and all testing, including unit, functional, and integration testing. When stories are not fully tested due to time limits at the end of a sprint, defects linger. When defects are not corrected until future sprints, they tend to grow in complexity and range.

To measure defects across sprints, record the defect ID of each defect story that moves from sprint to sprint. Then, list those rolling defects alongside the feature stories that are also rolling from sprint to sprint. Compare them and determine whether they are the same issue or different symptoms sharing a root cause. If the same defects show up in production or the numbers continually increase, address defect slippage as part of the Agile continuous improvement process.
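One way to track defects that roll from sprint to sprint is to record the open defect IDs at the end of each sprint and count how often each ID appears. The sketch below assumes that data has been collected by hand or exported from a tracker; the sprint names, defect IDs, and helper functions are hypothetical.

```python
# Hypothetical data: defect IDs still open at the end of each sprint.
open_defects_by_sprint = {
    "Sprint 1": {"D-1", "D-2"},
    "Sprint 2": {"D-2", "D-3"},
    "Sprint 3": {"D-2", "D-3", "D-4"},
}


def sprints_open(by_sprint):
    """Count the number of sprints at whose end each defect was still open."""
    counts = {}
    for defect_ids in by_sprint.values():
        for defect_id in defect_ids:
            counts[defect_id] = counts.get(defect_id, 0) + 1
    return counts


def rolled_over(by_sprint):
    """Return only the defects that were open at the end of more than one sprint."""
    return {d: n for d, n in sprints_open(by_sprint).items() if n > 1}


rolling = rolled_over(open_defects_by_sprint)
for defect_id, count in sorted(rolling.items()):
    print(f"{defect_id} was open at the end of {count} sprints")
```

The same defect IDs can then be compared against the production defect list to see whether long-lived defects are the ones escaping into releases.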

QA Metric #5 – Committed vs. Delivered Stories

As mentioned earlier, QA metrics 4 and 5 are related. The number of defects occurring across sprints ranks higher because those same defects tend to show up later in production. Measuring committed versus delivered stories applies to any story type, including feature enhancements and defects or defect fixes.

Measuring committed and delivered stories gives QA teams information on gaps in code or functionality that exist when related stories are not all fully delivered. Integration testing may find defects between dependent or related stories when they are not delivered at the same time.

To measure committed vs. delivered stories, track the development team’s progress for all stories. Note which stories are committed but not delivered at the sprint end and compare them to the stories delivered. Are the stories dependent on each other or related to each other? If they are, a delivered story may be missing code it needs from an undelivered one, which can surface as defects.

Measuring the number and type of stories that slip or slide from sprint to sprint indicates a planning issue and a story development problem. If the number of stories rolling over to future sprints increases continuously, the risk becomes higher for features or functionality to go missing in a release.
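The committed vs. delivered comparison can be sketched in a few lines of Python. The sprint data and the `delivery_summary` helper below are hypothetical and assume the committed and delivered story IDs are recorded for each sprint.

```python
# Hypothetical sprint records: story IDs committed at planning and delivered at sprint end.
sprints = {
    "Sprint 1": {
        "committed": {"US-1", "US-2", "US-3"},
        "delivered": {"US-1", "US-2", "US-3"},
    },
    "Sprint 2": {
        "committed": {"US-4", "US-5", "US-6", "US-7"},
        "delivered": {"US-4", "US-5"},
    },
}


def delivery_summary(sprint):
    """Return (ratio, missed) where ratio is delivered/committed and missed lists rolled stories."""
    committed = sprint["committed"]
    # Only count delivered stories that were actually committed for this sprint.
    delivered = sprint["delivered"] & committed
    missed = sorted(committed - delivered)
    ratio = len(delivered) / len(committed) if committed else 0.0
    return ratio, missed


summary = {name: delivery_summary(data) for name, data in sprints.items()}
for name, (ratio, missed) in summary.items():
    print(f"{name}: {ratio:.0%} delivered, rolled over: {missed}")
```

The list of missed stories is the useful part for QA: each one can be checked against the delivered stories for dependencies that integration testing should target.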

Getting Real Value From QA Metrics

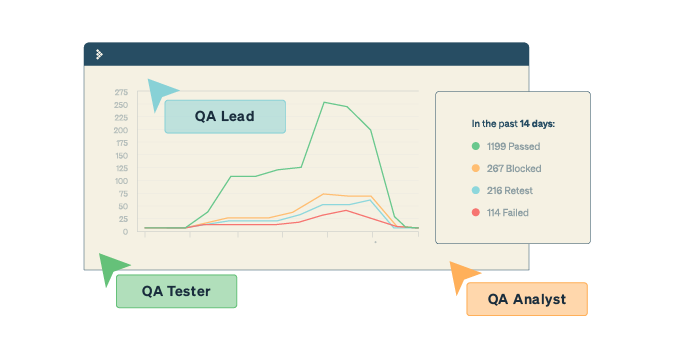

Before heading off to measure these top five QA metrics to improve testing and the quality of an application, clarify the goal of the QA team. In an Agile team, application quality is the responsibility of the whole team, not solely the QA tester. The goal of QA testing is not to find every defect in a release, simply because that is not possible in most software development timeframes. The purpose of improving QA testing is to provide assurance that the application meets the organization’s defined standard of sufficient application quality.

The inherent quality of QA testing is a balance between speed and value. If QA metrics indicate that a team has speed but lower quality, then the balance is off. Speed is necessary to keep the application and business relevant, as is application quality.

Measuring user sentiment, production defects, test case coverage, defects across sprints, and the number of stories the team commits to but fails to deliver gives the business a valuable overall picture of where the balance between application quality and speed lies. Measure, analyze, and review to improve application quality, and act on the results as part of continuous improvement.