This is a guest post by Justin Rohrman.

It is easy for testing work to be invisible or illegible. Most of it happens in your head, and sometimes, when the software is pretty good to begin with, there is no evidence that testing happened at all.

One thing I am regularly asked to do is review a team's testing practice. I usually find that the testers are there, they are working hard, and they care about their work. The problem is that their work is completely hidden. No one can see it, and because of that, no one understands it or knows how it might be improved.

So, how can you make your testing work visible to your team?

Scrum Standups

Most of the daily scrums I have been part of were difficult for testers. We went around the room, starting with developers and product people. If testers were called on at all for a status update, it was at the very end, and most people were distracted or uninterested by that point. A discussion would sometimes start before the testers said their bit, and we’d run out of time.

When one of the testers did get called on, they would say something like, “I’m testing the social media sharing card.” If they were working on multiple projects, the status would be even less specific, and they’d just say which project they were planning to work on for the day.

No one left the scrum knowing any more about the testing effort than they did when they walked in.

Just like software development, testing is multifaceted. Testers aren't only running tests; many different activities happen in the context of testing. The daily scrum is a good time to highlight some of them.

The team's updates shifted from very general statements to actually talking about the work. One person might be building test data, another might be trying to reproduce what looks like a race condition and could use some help, and a third might just need an hour or two more before moving on to the next change.

The daily standup is the appropriate time to share this level of detail.

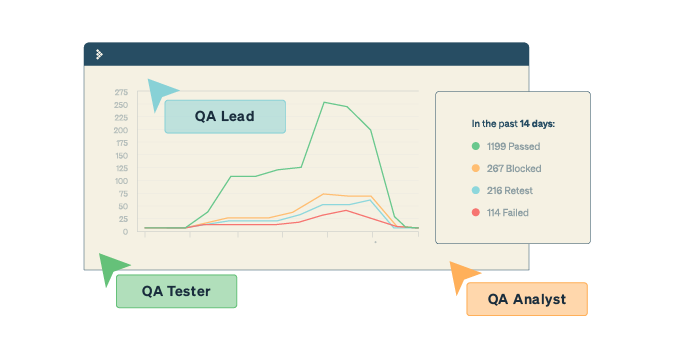

Test Coverage

Wanting to know how much of a product or feature has been tested is very reasonable. Developers want to anticipate any bug-fixing they might need to do. Managers need to understand how the release is coming along. And product people need to be able to explain when a customer might see a much-needed feature or fix in production. However, these are also questions most testers have a hard time answering, and that difficulty undermines their value in the eyes of the team.

One of the best ways to talk about coverage is in terms of inventories. A software product can be described from different perspectives: configurations, pages, features, text fields, supported browsers, databases and so on. You can keep an inventory for each of these. Then, when someone asks about test coverage at a high level, you can tell them that you have tested 15 of the 45 documented features on two of your supported browsers. You can also talk about what remains and what is out of scope.
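To make the inventory idea concrete, here is a minimal Python sketch of a features-by-browsers coverage inventory. Everything in it is hypothetical: the `CoverageInventory` class, the feature names and the browser list are illustrations, not a tool the author describes. It records which features have been tested on which browsers, then produces the kind of per-browser summary described above, plus what remains and what is out of scope.

```python
from dataclasses import dataclass, field


@dataclass
class CoverageInventory:
    """A hypothetical inventory of documented features and supported browsers."""

    features: list[str]
    browsers: list[str]
    out_of_scope: set[str] = field(default_factory=set)
    # Maps each feature name to the set of browsers it has been tested on so far.
    tested: dict[str, set[str]] = field(default_factory=dict)

    def record(self, feature: str, browser: str) -> None:
        """Mark a feature as tested on a browser, rejecting names not in the inventory."""
        if feature not in self.features:
            raise ValueError(f"unknown feature: {feature}")
        if browser not in self.browsers:
            raise ValueError(f"unknown browser: {browser}")
        self.tested.setdefault(feature, set()).add(browser)

    def in_scope(self) -> list[str]:
        """Documented features that are not marked out of scope."""
        return [f for f in self.features if f not in self.out_of_scope]

    def remaining(self) -> list[str]:
        """In-scope features not yet tested on any browser."""
        return [f for f in self.in_scope() if not self.tested.get(f)]

    def summary(self) -> str:
        """One line per browser: how many in-scope features have been tested on it."""
        lines = []
        for browser in self.browsers:
            done = sum(1 for f in self.in_scope() if browser in self.tested.get(f, set()))
            lines.append(f"{browser}: {done} of {len(self.in_scope())} in-scope features tested")
        return "\n".join(lines)


# Hypothetical example data.
inventory = CoverageInventory(
    features=["login", "search", "sharing card", "export"],
    browsers=["chrome", "firefox", "safari"],
    out_of_scope={"export"},
)
inventory.record("login", "chrome")
inventory.record("login", "firefox")
inventory.record("search", "chrome")

print(inventory.summary())
print("Remaining:", inventory.remaining())
print("Out of scope:", sorted(inventory.out_of_scope))
```

A spreadsheet does the same job; the point is only that the inventory makes answers like "2 of 3 features on Chrome, nothing yet on Safari" something you can read off rather than reconstruct from memory.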

At the feature level, I find that it helps to keep a smaller inventory of test ideas. This can be as simple as a text document or a mind map; it doesn't need to be pages of documentation. This can serve as a way to organize your test ideas, as well as a tool for describing how far along you are with testing a change and how much more time you might need.

Being able to talk about coverage removes some of the mystery from your work. It also shows that you’ve been paying attention.

Cross-Team Collaboration

The previous two ideas focus on communication. But for the most part, testing happens in your head: you have to think about the system in front of you, imagine ways it might fail, and then put those ideas into action with the mouse and keyboard. After a while, tests bleed into each other, and it is hard to tell where one starts and another ends. For most technical team members, bug reports are the only visible output of a tester's work. If the software is pretty good, there may be nothing to show aside from time spent.

One way to show other team members what testing means is to invite them into your work. I like to do this by pairing with developers who are willing. At a previous job, we paired about 90% of the time. Developers and testers would pick a change from the backlog and review it with a product manager. The pair worked together to build the change and design automated tests at whatever level was applicable — unit, service or browser. And at each point where it made sense to build and deploy a new container, they worked together on exploration.

I like this flow because it is efficient: when the pair is done, the change is ready to go to production. It also removes the mystery from testing. Unit tests are designed and built together, exploration happens together, and at the end of the change, test coverage is reviewed to see if anything is missing. This is visible testing.

Becoming Visible

The first step in making your work more visible is to talk about what you do, and your daily standup is a good place to start. Rather than glossing over the details of your work, pick out a few that people might be interested in: risks you are exploring, technical tasks you have to perform, developers you want to talk with. After that, pick specific points about your work you want to share (test coverage, test design, planning) and find a relevant audience for each.

Justin Rohrman has been a professional software tester in various capacities since 2005. In his current role, Justin is a consulting software tester and writer working with Excelon Development. Outside of work, he is currently serving as President of the Association for Software Testing Board of Directors, helping to facilitate and develop various projects.