This is a guest post by Matthew Heusser.

The goal of “traditional” testing was to make the work stable, predictable, and repeatable. If the work was defined well, it could be done by lower-skilled workers, measured, and predicted. That generally involved defining the test cases and test steps upfront.

Defining the work restricts the tester’s ability to go off-script, explore, and learn while doing the work. Yet many of the best test ideas occur while one is personally engaged with the software, actually testing it.

Cem Kaner, lead author of “Testing Computer Software,” pointed out that all testing has some off-script element. Even if the steps are documented, the tester will likely find bugs, and each bug must be reproduced and documented. When the tester returns to the official plan, they are unlikely to pick up at the exact place they left off. And even if they do, the software will be in a different state because of the bug-reproduction steps.

Instead of ignoring this fuzzy reality, exploratory testing turns it into a competitive advantage, freeing the software tester to pursue risks as they emerge in the moment. Yet once you set the tester free, how do you know the right pieces of the system have been tested? How do you manage testing?

This article is designed to address these questions.

What is exploratory testing?

When Kaner coined the term “exploratory testing” in 1988, he was thinking in terms of quick attacks, or “guerrilla raids.” Skilled testers who had learned a broad set of ways to overwhelm software could jump in and find bugs immediately, even without knowing the business rules. Later definitions focused on the tester’s ability to design tests in real time, execute them, and use the results to adapt the plan.

In 2006, James Bach and Michael Bolton modified Kaner’s interpretation and defined exploratory testing in this way:

An approach to software testing that emphasizes the personal freedom and responsibility of each tester to continually optimize the value of his work by treating learning, test design, and test execution as mutually supportive activities that run in parallel throughout the project.

This definition hits the high notes. It puts the tester in the driver’s seat, deciding in real time what to test. It emphasizes that the map is not the territory: while working, the tester may find a lead worth pursuing toward new bugs. And it recognizes that the tester switches among activities (learning, test design, and test execution) as they go.

What the definition does not do is provide any high-level view of what is happening in the software system.

If the software is small and trust is high, that might be enough. A highly skilled tester can “just test” a clock widget for an operating system or a simple data export that produces a text file. The problem arises when the software is large or complex, or when several team members are exploring it and you want to minimize overlap. In these situations, working from a list of what to do is convenient. Yet exploratory approaches tend to eschew up-front planning and treat a changing plan as a competitive advantage.

Techniques have evolved to address this problem, including the testing dashboard, charters, and thread-based testing.

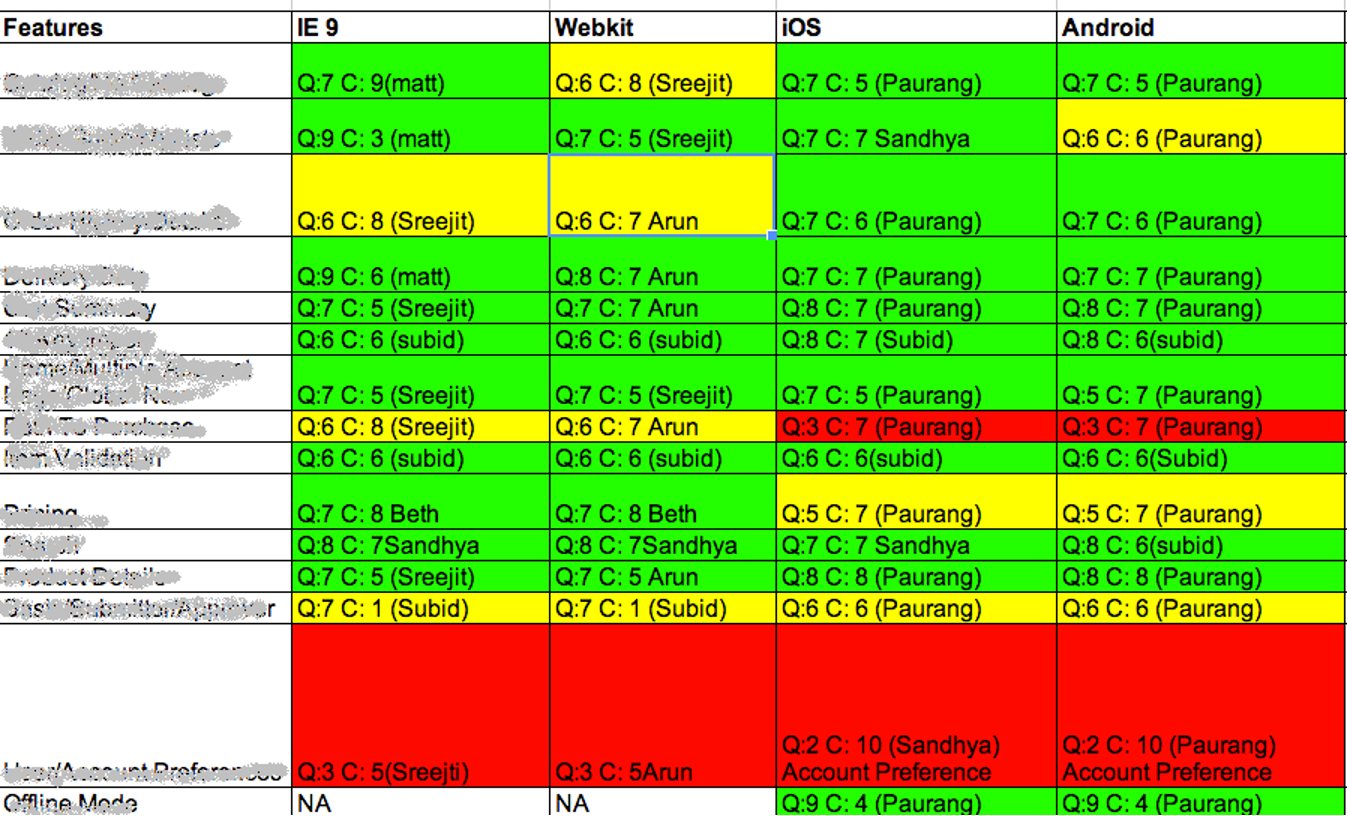

Image: Exploratory testing dashboards. Source: Live project data, Excelon Development, used with permission. www.xndev.com

A feature tour of the application is valuable, but it tends to ignore the customer journey, emergent risk, and the fact that each new build brings changes. Teams that use the spreadsheet approach tend to create a new spreadsheet for every major build, perhaps blocking off sections as untested because “those features shouldn’t have changed.” This sort of testing dashboard also provides no metrics or predictability.

The easiest way to visualize the status of exploratory testing might be a whiteboard list of features, with a smiley, neutral, or sad face for quality and a score or color for how thoroughly each feature has been covered.

Keeping James Bach’s low-tech testing dashboard in Google Docs or another collaborative spreadsheet makes this even easier, because anyone can view the dashboard in real time. The example above is from a real Excelon Development customer; the columns are browsers and mobile devices.
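As a rough illustration, the whiteboard dashboard described above can be modeled as a list of features, each with a quality face and a coverage score. This is a Python sketch only; the class and function names, the 0 to 3 coverage scale, and the rule for flagging features are hypothetical assumptions, not part of Bach’s dashboard or any particular tool.

```python
from dataclasses import dataclass
from enum import Enum


class Quality(Enum):
    # The whiteboard's smiley, neutral, or sad face
    GOOD = ":)"
    CONCERNS = ":|"
    BAD = ":("


@dataclass
class FeatureStatus:
    feature: str
    quality: Quality
    coverage: int  # hypothetical scale: 0 = untested ... 3 = thoroughly covered


def render_dashboard(rows: list[FeatureStatus]) -> str:
    """Render one line per feature: name, quality face, and a coverage bar."""
    width = max([len("Feature")] + [len(r.feature) for r in rows])
    lines = [f"{'Feature':<{width}}  Quality  Coverage"]
    for r in rows:
        bar = "#" * r.coverage + "." * (3 - r.coverage)
        lines.append(f"{r.feature:<{width}}  {r.quality.value:<7}  {bar}")
    return "\n".join(lines)


def needs_attention(rows: list[FeatureStatus]) -> list[str]:
    """Flag features with a non-smiley face or low coverage (assumed threshold: 2)."""
    return [r.feature for r in rows if r.quality is not Quality.GOOD or r.coverage < 2]


board = [
    FeatureStatus("Login", Quality.GOOD, 3),
    FeatureStatus("Checkout", Quality.BAD, 2),
    FeatureStatus("Reports", Quality.CONCERNS, 1),
]
print(render_dashboard(board))
print(needs_attention(board))  # ['Checkout', 'Reports']
```

A shared spreadsheet does the same job with less ceremony; the point of the sketch is only that each row pairs a quality judgment with a coverage judgment, so either one can trigger attention.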

Charters, sessions, and threads

The next major advancement in managing exploratory testing was session-based test management, pioneered at Hewlett-Packard. A session is a stretch of focused testing activity around a charter, a mission that describes how to invest the time. The initial sessions at HP were 90 minutes long; 30-minute sessions are more common today.

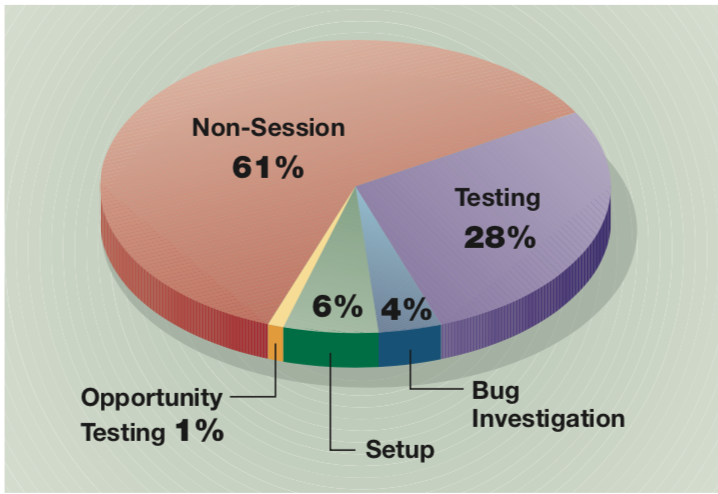

Keeping sessions the same length makes them comparable, which lets the team build metrics on them. Framed this way, any team member can do testing, not just “testers.” The person running a session takes notes, debriefs afterward with a lead or manager, and records roughly how the time was split among bugs, testing, and setup, known as BTS time.

Two major metrics for session-based testing are the number of sessions completed and the BTS time, often shown as a pie chart. A simple spreadsheet can also calculate administrative time, that is, time not spent in sessions.

Source: Session-Based Test Management, Software Quality Engineering Magazine, Jonathan Bach
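The session metrics above come down to simple arithmetic. The following Python sketch assumes each debrief records the minutes spent on bugs, testing, and setup (the field names, sample charters, and the eight-hour day are hypothetical) and computes the number of sessions completed, the BTS split as percentages for a pie chart, and the administrative time left over.

```python
from dataclasses import dataclass


@dataclass
class Session:
    charter: str
    bug_min: int    # minutes investigating and reporting bugs
    test_min: int   # minutes designing and running tests
    setup_min: int  # minutes on setup

    @property
    def total(self) -> int:
        return self.bug_min + self.test_min + self.setup_min


def bts_breakdown(sessions: list[Session]) -> dict[str, float]:
    """Percent of session time spent on bugs, testing, and setup (pie-chart slices)."""
    total = sum(s.total for s in sessions)
    if total == 0:
        return {"bug": 0.0, "test": 0.0, "setup": 0.0}
    return {
        "bug": 100 * sum(s.bug_min for s in sessions) / total,
        "test": 100 * sum(s.test_min for s in sessions) / total,
        "setup": 100 * sum(s.setup_min for s in sessions) / total,
    }


def admin_minutes(sessions: list[Session], hours_available: float) -> float:
    """Administrative time: available time not spent in sessions."""
    return hours_available * 60 - sum(s.total for s in sessions)


# Three hypothetical 30-minute sessions from one tester's day
sessions = [
    Session("Profile creation quick attacks", bug_min=12, test_min=15, setup_min=3),
    Session("Group permissions", bug_min=0, test_min=27, setup_min=3),
    Session("Reporting panel", bug_min=6, test_min=21, setup_min=3),
]
print(len(sessions))               # sessions completed: 3
print(bts_breakdown(sessions))     # {'bug': 20.0, 'test': 70.0, 'setup': 10.0}
print(admin_minutes(sessions, 8))  # 390
```

Because every session has the same length, totals and percentages stay comparable across testers and days, which is what makes these simple numbers usable as trends.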

Exploratory testing charters and missions

Each charter is designed to address a particular risk so that it is not missed through oversight. A charter for a social media application might, for example, be “Quick attacks around profile creation” or “Test multi-user comments, permissions, and race conditions within and out of group membership.”

A tester with the second charter would likely create three accounts, two of which belong to the same group, then make posts that only that group can see, confirming that the third account cannot see them. The tester might make a post, see it appear for the second user, then click delete on the post in one browser and, two seconds later, submit a comment on it from the other.

Charters usually focus on a particular piece of the application, such as the shopping cart, checkout, the login/lost password process, or the reporting panel. However, they can also explore a specific user journey or new functionality. Quick overview sessions are also common when a new browser is released, or simply to see what it would take to support a new browser or tablet.

Charters can turn a vague, black-box process into an explicit collection of risks managed in a given test run. However, tracking these results in a shared spreadsheet can become overwhelming, and details can be lost. Tracking them in individual documents on a network drive can be even worse. We need a better approach.

How to track your exploratory tests

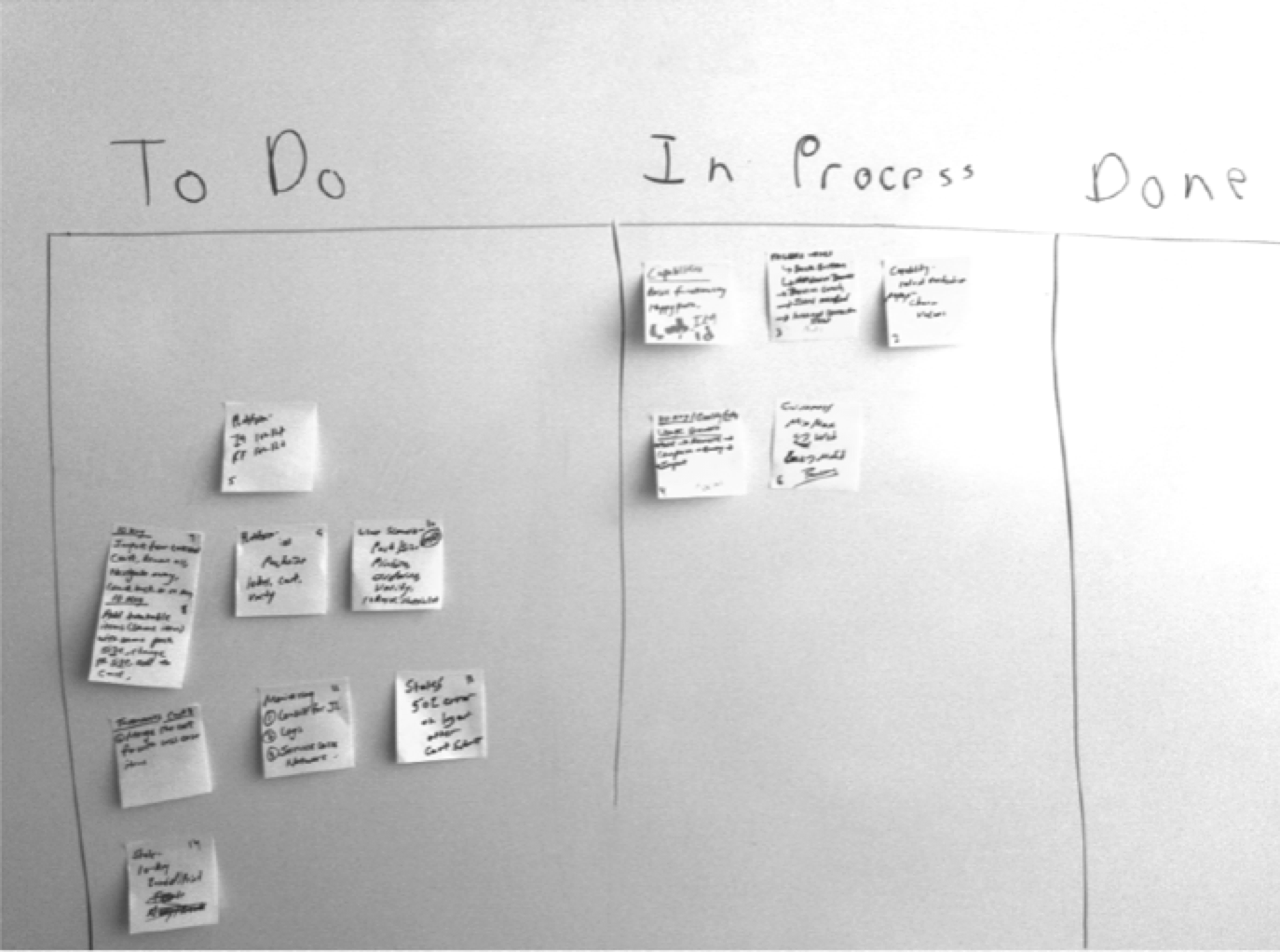

If the team is focused on a single day of intense testing, it can write each charter on a sticky note, sort the notes by priority, and place them in a “Not started” column on a board that also has “Doing” and “Done” columns. At lunch or at the end of the day, the team can review the board to decide whether the work is good enough.

Source: Live project data, Excelon Development, used with permission. www.xndev.com
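To illustrate the mechanics, the sticky-note board can be sketched as three ordered columns. This is a hypothetical Python model, not any particular tool: priorities are assumed to be integers where 1 is most important, and pulling new work always takes the top note in the “Not started” column.

```python
from dataclasses import dataclass, field

COLUMNS = ("Not started", "Doing", "Done")


@dataclass
class Board:
    # Each column holds charter titles in order; "Not started" is kept sorted by priority
    columns: dict[str, list[str]] = field(
        default_factory=lambda: {name: [] for name in COLUMNS}
    )

    @classmethod
    def from_charters(cls, charters: list[tuple[int, str]]) -> "Board":
        """Sort (priority, charter) pairs, 1 = most important, into 'Not started'."""
        board = cls()
        board.columns["Not started"] = [title for _, title in sorted(charters)]
        return board

    def move(self, charter: str, src: str, dst: str) -> None:
        """Move a sticky note from one column to another."""
        self.columns[src].remove(charter)
        self.columns[dst].append(charter)

    def start_next(self) -> str:
        """Pull the highest-priority charter that has not been started."""
        charter = self.columns["Not started"][0]
        self.move(charter, "Not started", "Doing")
        return charter

    def summary(self) -> dict[str, int]:
        """Counts per column, for the lunchtime or end-of-day review."""
        return {name: len(items) for name, items in self.columns.items()}


board = Board.from_charters([
    (2, "Quick attacks around profile creation"),
    (1, "Multi-user comments, permissions, and race conditions"),
    (3, "Reporting panel"),
])
first = board.start_next()  # the priority-1 charter
board.move(first, "Doing", "Done")
board.start_next()          # the priority-2 charter
print(board.summary())      # {'Not started': 1, 'Doing': 1, 'Done': 1}
```

Like the physical board, this model keeps no history beyond the current column of each note, which is exactly the limitation discussed next.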

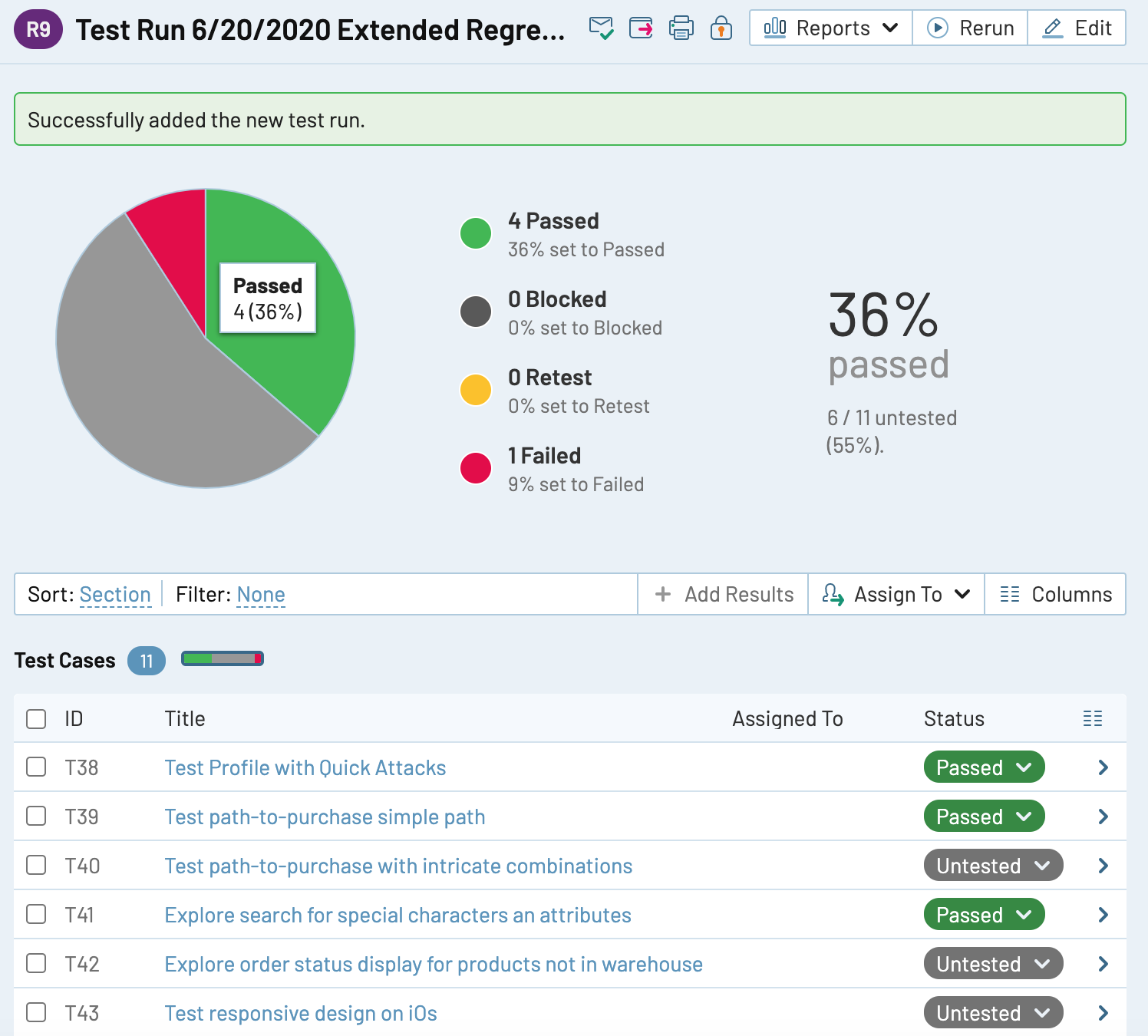

This works once, for one project, but it does not scale to multiple teams. The data captured during the exercise is not tracked; at best, any metrics are counted by hand and stored in a spreadsheet. For larger projects, a test case management system can store the charters as test cases.

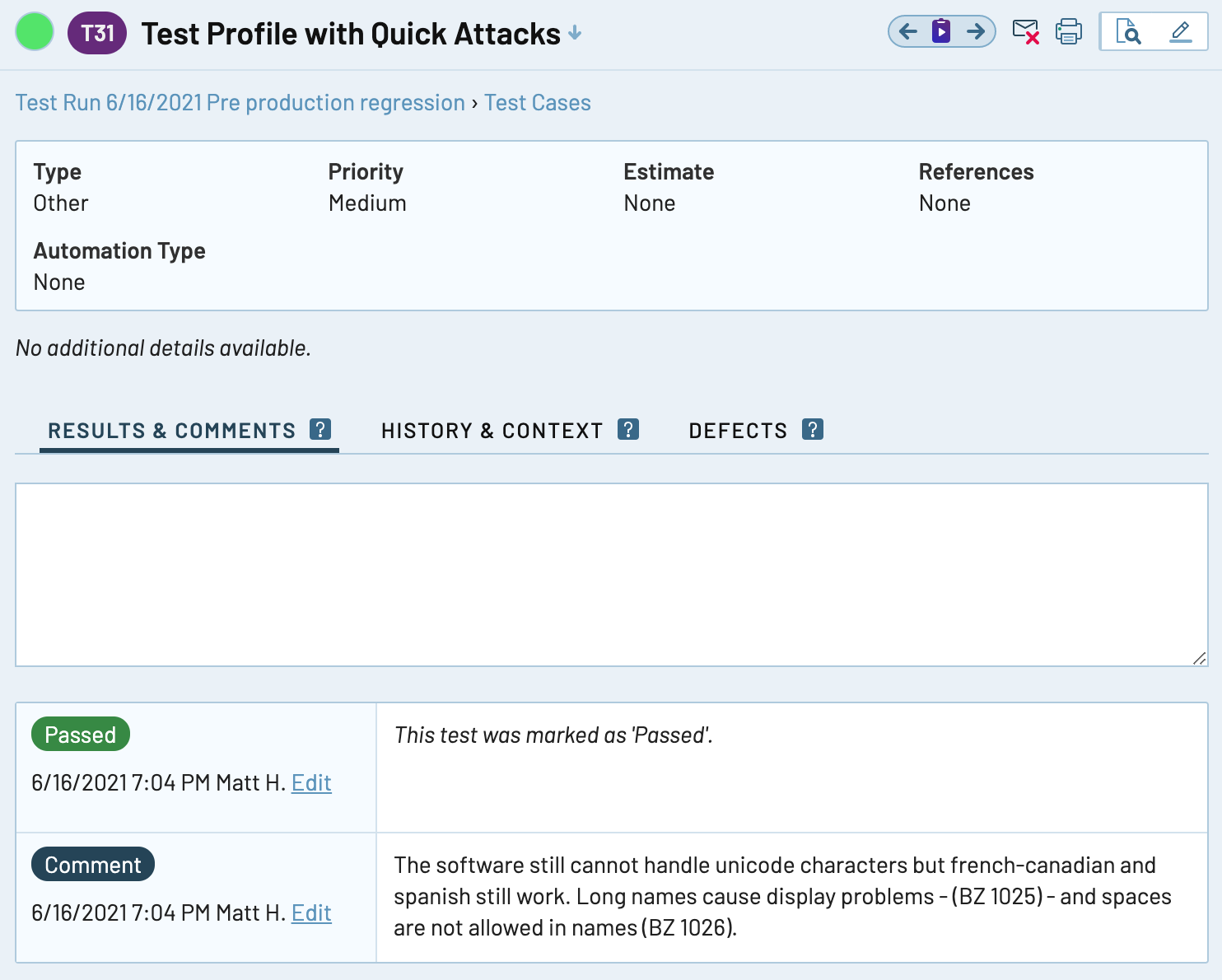

A test run is then the collection of test cases chartered for a particular regression test or set of new features. Testers can record their session notes as comments on the test case, and the software collects the data and makes summary reports available. For simpler applications that combine scripted and exploratory testing, the test run might be two dozen scripted test cases plus four charters designed to explore specific risks. The example above records a session as a test case.
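A minimal Python sketch of the same idea, assuming a hypothetical data model rather than the schema of any real test case management tool: a test run holds scripted cases and charters side by side, session notes are stored as comments, and a summary reports what has been covered and what remains. The run name, charter titles, and status values are illustrative only.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class TestCase:
    title: str
    kind: str                     # "scripted" or "charter"
    status: Optional[str] = None  # None until run; e.g. "passed", "failed", "explored"
    comments: list[str] = field(default_factory=list)  # session notes live here


@dataclass
class TestRun:
    name: str  # hypothetical: one run per release or regression pass
    cases: list[TestCase] = field(default_factory=list)

    def record(self, title: str, status: str, note: str = "") -> None:
        """Mark a case as run and attach the tester's notes, if any."""
        case = next(c for c in self.cases if c.title == title)
        case.status = status
        if note:
            case.comments.append(note)

    def remaining(self) -> list[str]:
        """What still needs to be covered in this run."""
        return [c.title for c in self.cases if c.status is None]

    def summary(self) -> dict[str, int]:
        """Counts of completed scripted cases, completed charters, and open items."""
        done = [c for c in self.cases if c.status is not None]
        return {
            "scripted_done": sum(c.kind == "scripted" for c in done),
            "charters_done": sum(c.kind == "charter" for c in done),
            "remaining": len(self.cases) - len(done),
        }


# Two dozen scripted cases plus four charters, mirroring the scenario in the text
charters = ["Checkout race conditions", "Login/lost password", "Reporting panel", "Tablet support"]
run = TestRun(
    "Release candidate",
    [TestCase(f"Scripted case {i}", "scripted") for i in range(1, 25)]
    + [TestCase(title, "charter") for title in charters],
)
run.record("Scripted case 1", "passed")
run.record("Checkout race conditions", "explored", "30-minute session; BTS split noted at debrief")
print(run.summary())  # {'scripted_done': 1, 'charters_done': 1, 'remaining': 26}
```

Note that a charter is marked “explored” rather than passed or failed, in keeping with the idea that exploratory sessions mainly produce information rather than verdicts.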

How to report on exploratory testing

Exploratory tests only “fail” when the tester finds showstopper issues; they are much more focused on uncovering information for decision-makers. The questions involved in managing exploratory testing are more along the lines of “Are we done yet?” and “When can we be done?” The debrief is an often-overlooked source of information, because through debriefs whoever is acting as test manager develops a feel for the quality of the application and a holistic view of coverage.

Test case management tools can also provide an excellent view into exploratory testing. By structuring the sessions for a release as “test cases” within a “test run” that represents that release, a leader can scroll through the run to see what has been covered and what still needs to be covered. Check out this article on how you can improve your exploratory testing with a test case management tool!
