This is a guest post by Nishi Grover Garg.

Test teams are forever designing and adding new tests, running them, and reporting results. But is your test team creating tests that are effective at finding real problems?

How do you know if your tests are actually working, and not just adding to the ever-increasing test count?

Here are some ways you can gauge the effectiveness of your tests — and improve them.

Defects found

The most obvious indicator of how effective your test cases are is the defects you find when executing them. As you and your team execute the designed test cases, constantly ask yourself these questions:

- Are these tests guiding me toward defects?

- Am I finding problems with the predefined test cases? Or do I have to do more exploration before even getting close to a problem?

- Are these tests exercising unique flows or use paths?

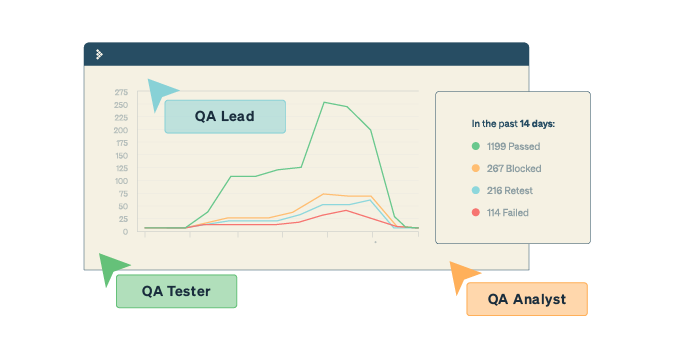

Metrics

You can also go through your defect list and find the test case related to each logged defect (if your defect management system supports that kind of linking). This interlinking helps the team understand which test cases led to the issues found.

You can then further analyze whether that test case was created during test design or later added to the list when the issue was found.
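As a rough illustration of that analysis, here is a minimal Python sketch. It assumes you can export each defect together with the ID of its linked test case, and that you know which test case IDs came out of the original test design. The `Defect` class, the field names, and the sample IDs are all hypothetical and not tied to any particular defect management tool.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass
class Defect:
    defect_id: str
    # ID of the linked test case, or None if no test case is linked (assumed field)
    test_case_id: Optional[str]


def detection_summary(defects, designed_ids):
    """Count defects by where their linked test case came from."""
    counts = Counter()
    for defect in defects:
        if defect.test_case_id is None:
            counts["unlinked"] += 1       # found without any linked test case
        elif defect.test_case_id in designed_ids:
            counts["designed"] += 1       # found by a test from the original design
        else:
            counts["added_later"] += 1    # test case was added after the issue was found
    return counts


# Hypothetical sample data
defects = [
    Defect("D-1", "TC-10"),
    Defect("D-2", "TC-99"),
    Defect("D-3", None),
    Defect("D-4", "TC-11"),
]
designed_ids = {"TC-10", "TC-11", "TC-12"}

summary = detection_summary(defects, designed_ids)
print(dict(summary))
```

A high share of "added_later" or "unlinked" defects would suggest that the designed test cases are not the ones leading you to problems.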

History

By looking at your past defects, you can analyze whether the tests that found issues in previous sprints and releases are becoming a part of your new test designs. If a technique you improvised during test execution helped you find a problem, add tests based on it to your test cases and make that a habit for the future.

Exploration

If your test cases are not effective, you will find few useful bugs while executing them, and most of your time will go to unplanned exploration or ad hoc testing. So, by comparing the time spent executing designed test cases with the time spent on ad hoc testing, you can get a sense of how effective those test cases are.
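The comparison can be as simple as a ratio. The sketch below assumes your team logs hours separately for designed test execution and for ad hoc testing. The function name and the sample figures are made up for illustration, and what counts as a "healthy" share is a judgment call for each team.

```python
def scripted_share(scripted_hours: float, ad_hoc_hours: float) -> float:
    """Return the fraction of testing time spent executing designed test cases."""
    total = scripted_hours + ad_hoc_hours
    if total == 0:
        raise ValueError("no testing time logged")
    return scripted_hours / total


# Hypothetical figures for one sprint
share = scripted_share(scripted_hours=12.0, ad_hoc_hours=28.0)
print(f"{share:.0%} of testing time went to designed test cases")
```

Tracking this number across sprints, alongside where the defects were actually found, gives more signal than any single value.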

If your test cases are effective, you will find issues, explore more use paths, navigate through different integrations with other features, and test different aspects of the same functionality.

If, at the end of your test execution, you feel that you have not done all of that, your test cases may be too simplistic or obvious, and therefore not effective enough to find useful bugs.

Based on these pointers, you can judge the effectiveness of your test cases.

Nishi is a corporate trainer, an agile enthusiast, and a tester at heart! With 13+ years of industry experience, she currently works with Trifacta as a Community Enablement Manager. She is passionate about training, organizing community events and meetups, and has been a speaker at numerous testing events and conferences. Check out her blog where she writes about the latest topics in Agile and Testing domains.