This is a guest post by Peter G. Walen.

Metrics and measurements are all around us. They inform, and are tracked in, every part of our lives, from our commute to work to how people perceive the economy is behaving. When used properly, they are a great help to our understanding of nearly everything. Why, then, do people working in software struggle with measurements? Is it the metric itself we are struggling with, or are we reacting to how metrics are used?

Metrics and measurement have been a factor in the workplace from time immemorial. Which worker turned out the most units in a day or a week? Which worker made the most mistakes? Taylor’s “Scientific Management” theory was built on precisely those types of metrics. Since the late 1970s, metrics at work have become more and more sophisticated. The mid to late 1980s saw the arrival of affordable desktop computers for homes and offices. Spreadsheet applications also proliferated.

They were amazing things with loads of interesting data points to draw on and reference. Anyone could load data into a spreadsheet, manipulate it, sort it, select different ranges and make impressive reports, graphs, scatter plots and trend analyses. They seemed to make a statistician of everyone with access to a spreadsheet.

People had access to massive numbers of data points without much understanding of the validity of the data, its source, or its logical, useful application.

When I started in software, the Lines of Code (“LOC”) measure was a commonly used tool for measuring programmers’ productivity. Thousand Lines of Code (KLOC) was used to express the complexity of systems. This, in turn, led to things like Defects per KLOC for large systems, or the number of Defects per some variation of that for the work produced by each programmer.
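As a rough illustration of the arithmetic behind Defects per KLOC, here is a minimal Python sketch. The function name and the module figures are invented for the example and do not come from any real project.

```python
def defects_per_kloc(defects: int, lines_of_code: int) -> float:
    """Return defect density: defects per thousand lines of code."""
    if lines_of_code <= 0:
        raise ValueError("lines_of_code must be positive")
    return defects / (lines_of_code / 1000)


# Hypothetical figures, made up purely for illustration.
modules = {
    "billing": (12, 8000),  # (defects, lines of code)
    "reports": (3, 1500),
}

for name, (defects, loc) in modules.items():
    print(f"{name}: {defects_per_kloc(defects, loc):.1f} defects per KLOC")
```

In this made-up data, the smaller module has fewer defects but the higher density (2.0 versus 1.5 per KLOC). The number is easy to compute, which is part of why it was so widely used.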

They were easy to measure. They were available. They were commonly used in many development organizations. They also played a huge part in shaping my view of Performance Metrics applied to the individual, team or group.

The Problem with Management of Software Metrics

When used as an information gathering device, metrics can show areas that are functioning well, or poorly. They can help highlight areas needing attention and deeper investigation. They can also help people form meaningful questions around specific areas of interest.

Many companies focus on things easy to count, like LOC or KLOC or bugs per KLOC and the number of bugs found in production vs the bugs found in testing. These are easy to get and can help guide training and organizational improvement.

There is nothing wrong with that as a goal.

The challenge comes when managers are tempted to look for “high performers” or apply performance standards based on these ideas. Things like bug counts, test cases written, test steps executed, and failure rates may be interesting information points. They are less likely to be meaningful individual performance measures.

Why would that be? Consider this scenario I have seen played out time and again.

A company wants to reward top-performing developers and testers. They implement a new performance metric program rewarding developers by the number of lines coded each week and testers by the number of test cases executed and the number of bugs recorded each week.

The metrics have shifted from informational to behavioral measures. Instead of being measured to help guide training and other improvements, they are part of an individual, reward-based program.

Thus, someone helping others solve a coding problem, or detect and describe a bug, will not get credit for that – because the measure is code produced or bugs detected by each person. Teamwork and collaboration will negatively impact performance ratings.

At the same time, if credit is given for the number of bugs, then loads of simple, trivial bugs will be written up. Code will be produced in volume, often not of very good quality.

Facts, Measurements & Behavior

A study published in Science Magazine looks at communication, opinion, beliefs and how they can be influenced, in some cases over very long terms, by a fairly simple technique: open communication and honest sharing.

The results of the study were notable. It showed that people tend to be influenced to the point of changing opinions and views on charged, sensitive topics, after engaging in non-confrontational, personal, anecdotal-based conversation.

With sensitive topics, arguments based on “proven facts” do little to bring about a change in perception or understanding.

Facts don’t matter

Well-reasoned, articulate, fact-based arguments often do little to convince people of pretty much anything. They may “agree” with you so you will go away, but they have not been convinced.

Instead, consider this: Emotions have a greater impact on people’s beliefs and decision-making processes than most people want to believe.

Release Metrics

Bugs: A fair number of shops, large or small, have a rule something like “No software will be released to production with known Critical or High bugs.” It is possible you have encountered something like this. It is amazing how quickly a High bug becomes a Medium bug and the fix gets bumped to the next release if there is a “suitable” work-around for that.

When I encounter that, I wonder “Suitable to whom?” Sometimes I ask flat out what is meant by “suitable.” Sometimes, I smile and chalk that up to the emotion.

Dev/Code Complete: Another favorite is “All features in the release must be fully coded and deployed to the Test Environment {X} days (or weeks) before the release date. All code tasks (stories) will be measured against this and the quality of the release will be compared against the percentage of stories done of all stories tasks in the release.” What?

That is really hard for me to say aloud and is kind of goofy in my mind. Rules like this make me wonder what has happened in the past to have such strict guidelines in place. I can understand wanting to make sure there are no last-minute code changes going in. I have also found changing people’s behaviors tends to work better by using the carrot – not a bigger stick to hit them with.

Bugs Found in Testing: There is a fun mandate that gets circulated sometimes. “The presence of bugs found in the Test Environment indicates Unit Testing was inadequate.” Hoo-boy. It might indicate that unit testing was inadequate. It might also indicate something far more complex and difficult to address by demanding “more testing.”

Possible Alternatives

Saying “These are bad ideas” may or may not be accurate. They may be the best ideas visible to those establishing the measurements. They may not have any idea on how to make them better.

Partly, this is the result of people with glossy handouts explaining to software executives how “best practices” will work to eliminate bugs in software and eliminate release night/weekend disasters. Of course, the game there is that these “best practices” only work if the people with the glossy handouts are doing the training and giving lectures and getting paid large amounts of money to make things work.

And when they don’t, more often than not the reason given is that the company did not “follow the process correctly” or is “learning the process.” Of course, if the organization tries to follow the consultant’s model based on the preliminary conversations, the effort is doomed to failure and will lead to large amounts of money going to the consultant anyway.

What if…

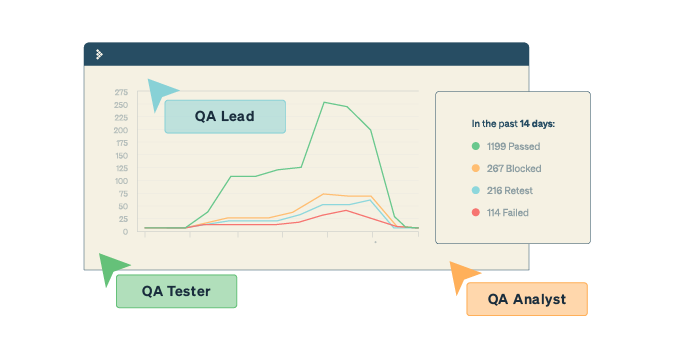

A practice I encountered several years ago was enlightening. I was working on a huge project as a QA Lead. Each morning we had a brief touch point meeting of project leadership (development leads and managers, QA Lead, PM, other boss-types) to discuss the day’s goals in development and testing.

As we were coming close to the official implementation date, a development manager proposed a “radical innovation.” At the end of one of the morning meetings, he went around the room asking the leadership folks how they felt about the state of the project. I was grateful because I was pushing hard to not be the gatekeeper for the release or be the Quality Police.

How he framed the question of “state of the project” was interesting – “Give a letter grade for how you think the project is going where ‘A’ is perfect and ‘E’ is doomed.” Not surprisingly, some of the participants said “A – we should go now, everything is great…” A few said “B – pretty good but room for improvement…” A couple said “C – OK, but there are a lot of problems to deal with.” Two of us said “D – there are too many uncertainties that have not been examined.”

Later that day, he and I repeated the exercise in the project war-room with the developers and testers actually working on the project. The results were significantly different. No one said “A” or “B”. A few said “C”. Most said “D” or “E”.

The people doing the work had a far more negative view of the state of the project than the leadership did. Why was that?

The leadership was looking at “Functions Coded” (completely or in some state of completion) and “Test Cases Executed” and “Bugs Reported” and other classic measures.

The rank-and-file developers and testers were more enmeshed in what they were seeing – the questions that were coming up each day that did not have an easy or obvious answer; the problems that were not “bugs” but were weird behaviors and might be bugs; a strong sense of dread of how long it was taking to get “simple, daily tasks” figured out.

The Result?

Management had a fit. Gradually, the whiteboards in the project room were covered with post-its and questions written in colored dry-erase markers. Management had a much bigger fit.

Product owner leadership was pulled in to weigh in on these “edge cases,” which led to IT management having another fit. The testers were raising legitimate questions. When the scenarios were explained to the bosses of the people actually using the software, they tried them, and sided with the testers and the developers: there were serious flaws.

We reassessed the remaining tasks and worked like maniacs to address the problems uncovered. We delivered the product some two months late – but it worked. Everyone involved, including the Product Owner leadership who were now regularly in the morning meetings, felt far more comfortable with the state of the software.

Lessons

The “hard evidence” and metrics and facts all pointed to one conclusion. The “feelings” and “emotions” and “beliefs” pointed to another.

In this case, following the emotion-based decision path was correct.

Counting bugs found and fixed in the release was interesting, but did not give a real measure of the readiness of the product. Likewise, counting test cases executed gave a rough idea of progress in testing, but did nothing at all to look at how the software actually functioned for the people really using it.

Can fact-based metrics really give us the information we need to make hard decisions?

Peter G. Walen has over 25 years of experience in software development, testing, and agile practices. He works hard to help teams understand how their software works and interacts with other software and the people using it. He is a member of the Agile Alliance, the Scrum Alliance and the American Society for Quality (ASQ), an active participant in software meetups, and a frequent conference speaker.