Most of the companies I have worked with have a “definition of done” that tells them when they are ready to release.

After all the feature work is done (or most of it, at least), we do some sort of regression testing. When the test team has finished their regression pass and reported all the bugs they discovered, we do a triage to put the bugs in order of priority, and development fixes the problems that were categorized as critical or high priority; basically, there can be no showstopper bugs. We do rounds of fixing, building, and retesting until all those issues are addressed. Once we make it through the triaged bug list, a final build is prepared (we called this a release candidate build, back in the old days), and we run a smoke test against that build. If we don’t find anything too surprising in the smoke test, it’s time to go to production.

My current project uses a more modern approach, and we can’t define whether a piece of work is done based on handoffs alone. So, how can we tell when something is ready to go to production, when boundaries in the flow of work are difficult to see?


Test Coverage and Gut Feelings

I’m working on a project that does XP (Extreme Programming) for most changes. Nearly every feature change that goes into production is worked on by two developers and a test specialist, all at the same time. Rather than the typical flow of “refinement, development, testing,” with requirements gathering and bug fixing handed off between roles on the team, everything happens within the dev-test triple until the change is ready to ship.

Here is how we know it’s time to go to production.

We don’t always test-drive, but we are always deliberate about the automation we build. Our product can be sliced up in a number of ways: model/view/controller, API/user interface, server code and front-end code, etc. At each point during development, we ask what the most appropriate way to test a piece of code is and where in the technology stack that test should go. In the end, we might have specs or Jasmine tests (we use a lot of Ruby and JavaScript) at several different layers of the product.
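To make the idea of a layer-appropriate test concrete, here is a minimal sketch of a test that lives at the model layer rather than in the browser. The `Cart` class and its discount rule are hypothetical, and Minitest stands in for the team’s spec framework only because it ships with Ruby. The point is that a business rule like this can be checked quickly and precisely without driving a user interface.

```ruby
require "minitest/autorun"

# Hypothetical model: a cart that totals line items and applies a
# percentage discount. Rules like this live below the UI, so they
# can be tested without a browser.
class Cart
  Item = Struct.new(:name, :price_cents, :quantity)

  def initialize
    @items = []
  end

  # Adds a line item and returns self so calls can be chained.
  def add(name, price_cents, quantity = 1)
    raise ArgumentError, "quantity must be positive" unless quantity.positive?
    @items << Item.new(name, price_cents, quantity)
    self
  end

  # Total in cents after an optional discount percentage (0-100).
  # Integer division rounds the discount down to a whole cent.
  def total_cents(discount_percent: 0)
    subtotal = @items.sum { |item| item.price_cents * item.quantity }
    subtotal - (subtotal * discount_percent / 100)
  end
end

# Model-layer tests: fast, small, and each focused on one rule.
class CartTest < Minitest::Test
  def test_totals_quantities
    cart = Cart.new.add("pen", 150, 2).add("pad", 300)
    assert_equal 600, cart.total_cents
  end

  def test_applies_discount
    cart = Cart.new.add("pad", 1000)
    assert_equal 900, cart.total_cents(discount_percent: 10)
  end

  def test_rejects_zero_quantity
    assert_raises(ArgumentError) { Cart.new.add("pen", 150, 0) }
  end
end
```

Tests like these are cheap enough to run on every change, which is what makes them useful as an early warning during a refactor. They say nothing about how the cart feels to a person using it, which is where exploration comes in.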

One interesting inflection point is building tests against a view. Sometimes, we find that we are spending a lot of time trying to recreate actions that might take place in a browser. This is usually the point when we decide to build that test in WebDriver to run against a browser rather than building it to run against the view code.

This suite of automated tests helps with code design, partly because, when we test-drive, it tells us when a new line of code is done, and partly because it acts as an early alert system that tells us if something has gone wrong during a refactor. These tests are small, concise and very useful, but they are also shallow and not representative of what a person does when using the software.

We balance this with exploration. At various points during the flow of development, someone on the triple will build and deploy a new container. For each build, we assess what the recent changes were and where the good and bad of that change might manifest in the user interface, then we explore to find risks and problems.

When our tests are all green and the three of us have explored the change to address needed test coverage that automation cannot provide (as well as fix the problems we find), we are done with our side of the work. There is one more important step before we get to production, though.

Continuous Demonstration: The Other CD

We have a series of grooming meetings before each sprint begins. Our product managers gather groups of related change requests and then arrange time with a developer and tester, though not necessarily the people who will be working on that change. We start with talking about what the customer needs and listing a handful of acceptance criteria.

After the meeting we have a more refined perspective about what the customer is looking for, as well as clearer acceptance criteria, and sometimes we end up finding ways to break up the card and deliver smaller pieces of the change at a time. The aim of these grooming meetings is to reduce the amount of ambiguity when development starts. We can never be 100 percent clear ahead of time — this is software, after all — but we should do our best.

Immediately before development begins, we have a brief, so-called Three Amigos meeting. The developers and test specialists who will be working on the card meet with a product manager to go over the finer details of what needs to be done and whom they are doing it for. Sometimes we will make modifications to the Jira card, but usually we use this meeting to add context to what we will be working on for the next day or three.

After our normal flow of development and testing, we have a demo for the stakeholders. Usually the product managers or in-house staff will present the changes we worked on. We merge our feature branch with the main development branch, create a new container with that code, and deploy that container to a production-like environment. The point of all this is to get as close to what the customers might experience as possible. Someone who worked on the change will walk through the change, as well as any possible problems customers might encounter, and the stakeholders ask questions about specific scenarios. When the changes are more technical, such as an API change or change to how an internal library works, the demo is given to the development and ops team.

The flow I described is a sandwich of demonstration. At the beginning, we demonstrate and refine our understanding of the change we are about to make, and after feature development seems done, we demonstrate our understanding of that change with working software. Having a flow of demonstration means that anyone, at any point in the process, can take a look and say, “This isn’t right; we aren’t quite done yet.”


The Cult of the Green Bar, and Other Myths

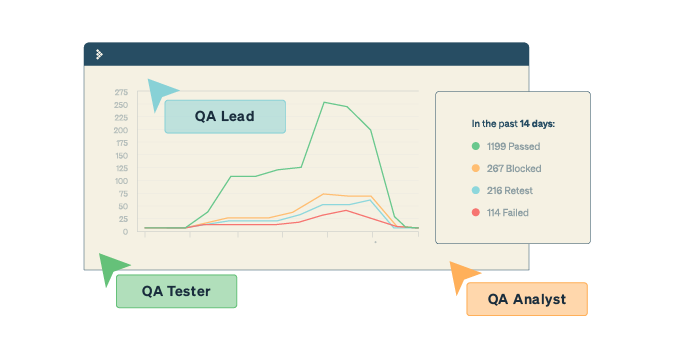

I occasionally hear references to something called the “cult of the green bar,” a phrase people use when talking about projects that practice continuous delivery or pairing. The sentiment is that some companies rely so heavily on automation that the human element of testing and exploration disappears. In this picture, developers write product code, then write test harnesses and test code, and as soon as they see a green bar showing that the continuous integration build passed, they push the code to production. To achieve real cult status, they don’t even look at CI; new code is pushed to production automatically as soon as all tests on all builds are green.

The thing I don’t see talked about as much is the sophisticated architecture that makes this possible. Every feature has an on/off flag so that it can be disabled almost instantly if there is a problem. Builds can be rolled back seamlessly, and there is monitoring, observability and reporting at each layer of the product. Only a handful of very big companies make enough money and serve enough customers to afford this sort of architecture.
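A per-feature on/off flag can be as simple as a lookup consulted at the point where old and new code paths diverge. The sketch below assumes an in-memory store and a hypothetical `new_checkout` feature; it is not any particular flag library. A real system would keep flags in shared storage so that flipping one takes effect on every server at once, and would pair flags with rollback and monitoring.

```ruby
# Minimal in-memory feature flag store (hypothetical; not a specific library).
# A Mutex guards the hash so flags can be flipped safely from another thread.
class FeatureFlags
  def initialize(defaults = {})
    @flags = defaults.transform_keys(&:to_sym)
    @mutex = Mutex.new
  end

  # Unknown flags are treated as off, so a missing flag fails safe.
  def enabled?(name)
    @mutex.synchronize { @flags.fetch(name.to_sym, false) }
  end

  def enable(name)
    @mutex.synchronize { @flags[name.to_sym] = true }
  end

  # The "kill switch": turn a feature off without a deploy.
  def disable(name)
    @mutex.synchronize { @flags[name.to_sym] = false }
  end
end

# Hypothetical call site: the old code path stays in place until the
# new feature has proven itself in production.
def checkout_page(flags)
  if flags.enabled?(:new_checkout)
    "new checkout"
  else
    "legacy checkout"
  end
end

flags = FeatureFlags.new(new_checkout: true)
puts checkout_page(flags) # prints "new checkout"
flags.disable(:new_checkout)
puts checkout_page(flags) # prints "legacy checkout"
```

Keeping the legacy path alive behind the flag is what makes disabling a feature nearly instant: no build, no deploy, just a changed value.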

What I see much more often is the human-centric approach I described above that balances automation, exploration and demonstration.

Getting to Done

Defining done in a modern software development context, such as pair programming, will probably focus on appropriate automated test coverage that passes consistently, exploration of more complex scenarios, and a last-minute demonstration to the stakeholders before a release. Each piece of this flow finds important problems before they reach production and helps us get as close as possible to building the right thing the first time around. And the important part: we do all of this without pausing for handoffs between roles in development.
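One way to keep a definition like this explicit is to write the checks down as data. The sketch below is not something the team described using; `ReleaseCheck` and its fields are hypothetical stand-ins for CI history, exploration notes, and demo feedback. It simply reports every reason a change is not yet done.

```ruby
# Hypothetical snapshot of a change's status; the fields are illustrative.
ReleaseCheck = Struct.new(
  :recent_test_runs, # CI results for recent builds, e.g. [:passed, :failed]
  :exploration_done, # true once the triple has explored the change
  :open_bugs,        # problems found while exploring that are not yet fixed
  :demo_objections,  # "This isn't right" notes raised during the demo
  keyword_init: true
) do
  # Every reason the change is not done; an empty list means done.
  def blockers
    reasons = []
    runs = Array(recent_test_runs)
    if runs.empty? || runs.any? { |run| run != :passed }
      reasons << "automated tests have not passed consistently"
    end
    reasons << "exploration is not finished" unless exploration_done
    bugs = Array(open_bugs)
    reasons << "#{bugs.size} open bug(s) from exploration" unless bugs.empty?
    objections = Array(demo_objections)
    reasons << "#{objections.size} objection(s) from the demo" unless objections.empty?
    reasons
  end

  def done?
    blockers.empty?
  end
end

check = ReleaseCheck.new(
  recent_test_runs: [:passed, :failed, :passed],
  exploration_done: true,
  open_bugs: [],
  demo_objections: ["Discount should not apply to gift cards"]
)
p check.done?   # prints false
p check.blockers
# prints ["automated tests have not passed consistently", "1 objection(s) from the demo"]
```

Returning reasons rather than a bare true/false fits the spirit of continuous demonstration: anyone looking at the check can see why the change isn’t done yet.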

How do you know when you are ready to go to production?

This is a guest posting by Justin Rohrman. Justin has been a professional software tester in various capacities since 2005. In his current role, Justin is a consulting software tester and writer working with Excelon Development. Outside of work, he currently serves as President of the Association for Software Testing Board of Directors, helping to facilitate and develop various projects.
