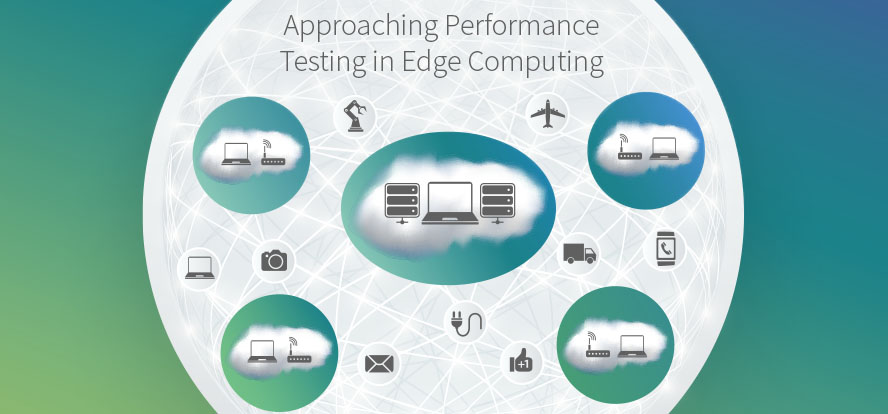

Edge computing is about moving computational intelligence as close to the edge of a network as possible. In the past, “frontline” devices gathered data passively and sent the information on to a target endpoint for processing. Under edge computing, some processing is done within the frontline device itself, and the result of the computation is passed on to other processing targets within the network.

Edge computing is a transformational approach to how distributed applications work on the internet. But, as with any new technique, there are challenges. This is particularly true when it comes to performance testing within an edge computing ecosystem.

Understanding these challenges is useful, particularly now that edge computing is becoming a more prevalent part of the internet. With this new paradigm, we will need to devise new ways of approaching performance testing.


Understanding Edge Computing

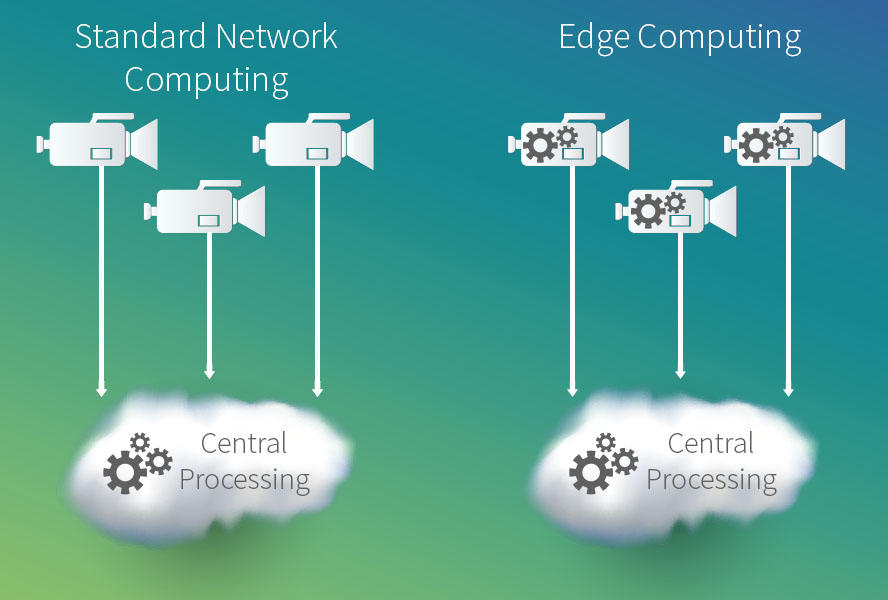

One of the easiest ways to understand edge computing is to consider a video camera connected to the internet. Before edge computing came along, the video camera simply captured all visual activity occurring before its lens. Each frame of video was converted into a chunk of data that was sent over the network in a stream. The camera was on all the time, streaming data back to a server, regardless of whether any motion was occurring. Clearly, a good deal of data of marginal use was being passed over the network, eating away at bandwidth.

In an edge computing paradigm, intelligence is put into the video camera so it can identify motion and then stream data back to the server only when motion occurs (Figure 1). Such an approach is highly efficient because only valuable data gets back to the server for processing.

Figure 1: In edge computing, input devices on the network are given intelligence to do analysis and make decisions
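
The motion-gated camera described above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular camera works: it assumes frames arrive as flat lists of grayscale pixel values, and the names `mean_abs_diff`, `motion_frames` and the `threshold` value are hypothetical choices made for the example.

```python
from typing import Iterable, Iterator, List

# Assumption: a frame is a flat list of grayscale pixel values (0-255).
Frame = List[int]


def mean_abs_diff(a: Frame, b: Frame) -> float:
    """Average per-pixel absolute difference between two equally sized frames."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def motion_frames(frames: Iterable[Frame], threshold: float = 10.0) -> Iterator[Frame]:
    """Yield only the frames that differ noticeably from the previous frame."""
    previous = None
    for frame in frames:
        if previous is not None and mean_abs_diff(frame, previous) > threshold:
            yield frame  # only these frames would be streamed to the server
        previous = frame


# Tiny made-up example: two still frames, two frames with an object, one still frame.
still = [0, 0, 0, 0]
moved = [0, 200, 200, 0]
captured = [still, still, moved, moved, still]
sent = list(motion_frames(captured))
print(f"captured {len(captured)} frames, sent {len(sent)}")
```

The filter runs on the device, so the frames that show no change never leave the camera. A real device would likely use a more robust detector, but the bandwidth-saving idea is the same.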

This sort of computing is now becoming the norm. All the security video cameras in the building where I live are motion-sensitive: The video camera is smart enough to detect motion and save only the video stream for the given time span of motion. This means that the only time that data is saved is when there is activity around the building. The camera also timestamps the video when saving the stream, so we don’t have to go through hours of viewing to find an event of interest. We can just browse through recordings of timestamped video segments. A process that used to take hours is now done in minutes.

Now apply the concepts behind edge computing to other types of devices connected to the internet of things. Take a fitness device, for example. A fitness tracker can monitor the heartbeat of the person wearing it. Applying the principles of edge computing, we can make it so that when the wearer’s heartbeat stops, the device itself contacts an EMT service for medical assistance. No time is lost to the network latency that would be incurred if a backend server were the sole point of computational intelligence. Instead, the edge device makes the call for help immediately, saving time in a life-or-death situation. The example is extreme, but it does provide profound insight into the power and benefit of edge computing.
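
As a rough sketch of that idea, the decision logic below runs entirely on the device and triggers an alert without a server round trip. Everything here is an assumption made for illustration: the `monitor` function, the `flat_limit` parameter and the `alert` callback are hypothetical, and a real tracker would need far more careful signal handling.

```python
from typing import Callable, Sequence


def monitor(readings: Sequence[int], alert: Callable[[], None], flat_limit: int = 3) -> bool:
    """Call alert() once, as soon as flat_limit consecutive readings are 0 bpm."""
    flat = 0
    for bpm in readings:
        # Count consecutive zero readings; any nonzero reading resets the count.
        flat = flat + 1 if bpm == 0 else 0
        if flat >= flat_limit:
            alert()  # decided locally, no backend involved
            return True
    return False


# Hypothetical alert action: record the call instead of contacting a real service.
calls = []
alerted = monitor([72, 70, 0, 0, 0, 0], alert=lambda: calls.append("call EMT"))
print(alerted, calls)
```

Requiring several consecutive flat readings is a simple guard against a single dropped sample triggering a false alarm.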

Edge computing is an important technology. If nothing else, it will cut down on the amount of useless data that gets passed around the internet. But, as with any technology, in order to be viable, it must be testable. And this is where the challenges of edge computing scenarios emerge.

Performance Testing at the Edge

Computing requires both software and hardware. Over the years hardware has become so abstract that we now treat a lot of it as software — thus, infrastructure as code. As such, a good deal of our performance testing treats the underlying hardware as a virtualized layer that is consistent and predictable. For the most part, it is, provided you’re dealing with the types of hardware that live in a data center. But things get difficult when we move outside the data center.

Take cellphones, for example. Most cellphone manufacturers provide software that emulates the physical device. Software developers use these emulators to write and test code. It’s a lot easier for a developer to write code using an emulator than to have to be constantly deploying code to a physical device every time a line of code is changed.

Using emulators speeds up the process of writing and testing applications that run on cellphones. But at some point, someone, somewhere is going to need to test that software on a real cellphone. To address this issue, companies set up cellphone testing farms: A company will buy every cellphone known to man and put them in a lab. The lab is configured to allow a tester to declare the physical phone that the given software under test will be installed and run on. (Some companies have automated the process, and others even provide cellphone farms as a service.) In this case, the challenge is conducting large-scale performance testing on disparate physical devices.

The same cannot be said about IoT devices such as fitness trackers, household appliances and driverless vehicles. These devices are positioned to be an important part of the edge computing ecosystem, but presently, the emulation capabilities required to conduct adequate performance testing of software intended to run on these devices are limited or, in some cases, nonexistent. And there is still the problem that at some point somebody is going to have to load the software onto a piece of the physical hardware in order to run the performance tests required.

Given that most modern performance testing is a continuous, automated undertaking, when it comes to performance testing in an edge computing environment, it seems as if we’ve gone back to the Stone Age.


How to Move Forward

Traditionally, companies buy hardware in order to run software, so most performance testing today is primarily focused on testing software. However, in the world of edge computing, companies buy the appliance, which is a combination of both hardware and software. In this case, performance testing is focused on the appliance. It’s a different approach to testing — one that still requires a good deal of time and labor to execute.

If the history of software development has shown us anything, it’s that at some point, automation in general — and test automation in particular — must prevail. But in order for automation to prevail in the edge computing ecosystem, a higher degree of standardization must be achieved among IoT devices on an industry-wide basis.

In other words, the way your tests interface with a driverless vehicle should not be that different from the way the tests interact with an internet-aware refrigerator. Yes, you might be testing different features and capabilities, but the means of integration must be similar. Think of it this way: Monitoring the cooling system of a driverless vehicle should not be that different from monitoring the cooling system of an internet-aware refrigerator.
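
To make the point concrete, here is a minimal sketch of what a shared test interface could look like. No such industry standard exists today, so every name below (`CoolingMonitor`, `read_coolant_temp_c`, `FakeVehicle`, `FakeRefrigerator`, `time_reads`) is hypothetical, and the two device classes are stand-ins rather than real drivers.

```python
import time
from typing import Protocol


class CoolingMonitor(Protocol):
    """Hypothetical common interface any device with a cooling system would expose."""

    def read_coolant_temp_c(self) -> float: ...


class FakeVehicle:
    def read_coolant_temp_c(self) -> float:
        return 88.5  # stand-in value, not a real sensor reading


class FakeRefrigerator:
    def read_coolant_temp_c(self) -> float:
        return 3.0  # stand-in value, not a real sensor reading


def time_reads(device: CoolingMonitor, samples: int = 100) -> float:
    """Return the mean seconds per reading over `samples` calls."""
    start = time.perf_counter()
    for _ in range(samples):
        device.read_coolant_temp_c()
    return (time.perf_counter() - start) / samples


# The same performance test runs unchanged against both device types.
results = {type(d).__name__: time_reads(d) for d in (FakeVehicle(), FakeRefrigerator())}
for name, seconds in results.items():
    print(f"{name}: {seconds:.9f} s per read")
```

Because the test depends only on the shared interface, adding a new device type means writing an adapter for that device, not a new test harness.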

Solving the problem of working with disparate devices in a common way is nothing new. At one time the way a printer connected to a computer was completely different from the way you connected a keyboard or mouse. Now, USB provides the common standard. The same thing needs to happen with edge computing devices.

Creating a universal standard for emulating IoT devices, as well as for physically accessing any device via automation, is the hidden challenge in edge computing when it comes to performance testing. But the challenge is not insurmountable; device standardization is a problem we’ve solved before and one we’re sure to solve again. The company that meets the challenge this time may very well set the standard for performance testing in the edge computing ecosystem for a long time to come.

Article by Bob Reselman, a nationally known software developer, system architect, industry analyst and technical writer/journalist. Bob has written many books on computer programming and dozens of articles about topics related to software development technologies and techniques, as well as the culture of software development. Bob is a former Principal Consultant for Cap Gemini and Platform Architect for the computer manufacturer Gateway. In addition to his software development and testing activities, Bob is in the process of writing a book about the impact of automation on human employment. He lives in Los Angeles and can be reached on LinkedIn at www.linkedin.com/in/bobreselman.
